OpenVINO™ を使用した YOLOv7 の変換と最適化¶

この Jupyter ノートブックは、ローカルへのインストール後にのみ起動できます。

YOLOv7 アルゴリズムは、コンピューター・ビジョンとマシンラーニングのコミュニティーに大きな波を起こしています。これは、画像を入力として受け取り、画像内の各オブジェクトの境界ボックスとクラス確率を予測することで、画像認識タスクを実行するリアルタイムのオブジェクト検出アルゴリズムです。

YOLO は “You Only Look Once (一度だけ見る)” の略で、人気のあるリアルタイム・オブジェクト検出アルゴリズムのファミリーです。オリジナルの YOLO オブジェクト検出器は 2016年に初めてリリースされました。以来、YOLO のさまざまなバージョンやバリエーションが提案され、それぞれがパフォーマンスと効率を大幅に向上させています。YOLOv7 は YOLO モデルファミリーの進化の次の段階であり、推論コストを増やすことなく、リアルタイムのオブジェクト検出精度を大幅に向上させます。実現に関する詳細については、オリジナルの論文とリポジトリーを参照してください。

リアルタイムの物体検出は、コンピューター・ビジョン・システムの主要コンポーネントとして使用されます。リアルタイム物体検出モデルを使用するアプリケーションには、ビデオ分析、ロボット工学、自動運転車、複数物体の追跡と物体の計数、医療画像分析などがあります。

このチュートリアルでは、OpenVINO を使用して PyTorch YOLO V7 を実行および最適化する手順を段階的に説明します。

このチュートリアルは次のステップで構成されます。

PyTorch モデルを準備します。

データセットをダウンロードして準備します。

元のモデルを検証します。

PyTorch モデルから ONNX へ変換します。

ONNX モデルを OpenVINO IR に変換します。

変換されたモデルを検証します。

最適化パイプラインを準備して実行します。

FP32 モデルと量子化モデルの精度を比較します。

FP32 モデルと量子化モデルのパフォーマンスを比較します。

目次¶

PyTorch モデルの取得¶

一般に、PyTorch モデルは、モデルの重みを含む状態辞書によって初期化された torch.nn.Module クラスのインスタンスを表します。リポジトリーで入手できる COCO データセットで事前トレーニングされた YOLOv7 小型モデルを使用します。事前トレーニングされたモデルを取得する一般的な手順は次のとおりです。

モデルクラスのインスタンスを作成します。

事前トレーニングされたモデルの重みを含むチェックポイント状態辞書をロードします。

一部の操作を推論モードに切り替えるためモデルを評価に切り替えます。

この場合、モデル作成者は YOLOv7 モデルを ONNX に変換できるツールを提供しているため、これらの手順を手動で実行する必要はありません。

必要条件¶

%pip install -q "openvino>=2023.1.0" "nncf>=2.5.0"

DEPRECATION: pytorch-lightning 1.6.5 has a non-standard dependency specifier torch>=1.8.*. pip 24.1 will enforce this behaviour change. A possible replacement is to upgrade to a newer version of pytorch-lightning or contact the author to suggest that they release a version with a conforming dependency specifiers. Discussion can be found at https://github.com/pypa/pip/issues/12063

Note: you may need to restart the kernel to use updated packages.

import sys

from pathlib import Path

sys.path.append("../utils")

from notebook_utils import download_file

# Clone YOLOv7 repo

if not Path('yolov7').exists():

!git clone https://github.com/WongKinYiu/yolov7

%cd yolov7

Cloning into 'yolov7'...

remote: Enumerating objects: 1197, done.[K

Receiving objects: 0% (1/1197)

Receiving objects: 1% (12/1197)

Receiving objects: 2% (24/1197)

Receiving objects: 3% (36/1197)

Receiving objects: 4% (48/1197)

Receiving objects: 5% (60/1197)

Receiving objects: 6% (72/1197)

Receiving objects: 7% (84/1197)

Receiving objects: 8% (96/1197)

Receiving objects: 9% (108/1197)

Receiving objects: 10% (120/1197)

Receiving objects: 11% (132/1197)

Receiving objects: 12% (144/1197)

Receiving objects: 13% (156/1197)

Receiving objects: 14% (168/1197)

Receiving objects: 15% (180/1197)

Receiving objects: 16% (192/1197)

Receiving objects: 17% (204/1197)

Receiving objects: 18% (216/1197)

Receiving objects: 19% (228/1197)

Receiving objects: 20% (240/1197)

Receiving objects: 21% (252/1197)

Receiving objects: 22% (264/1197)

Receiving objects: 23% (276/1197)

Receiving objects: 24% (288/1197)

Receiving objects: 25% (300/1197)

Receiving objects: 26% (312/1197)

Receiving objects: 26% (322/1197), 3.61 MiB | 3.50 MiB/s

Receiving objects: 27% (324/1197), 3.61 MiB | 3.50 MiB/s

Receiving objects: 27% (334/1197), 7.27 MiB | 3.52 MiB/s

Receiving objects: 27% (334/1197), 10.93 MiB | 3.53 MiB/s

Receiving objects: 28% (336/1197), 10.93 MiB | 3.53 MiB/s

Receiving objects: 28% (338/1197), 14.60 MiB | 3.53 MiB/s

Receiving objects: 28% (339/1197), 18.26 MiB | 3.55 MiB/s

Receiving objects: 28% (339/1197), 21.92 MiB | 3.55 MiB/s

Receiving objects: 28% (340/1197), 23.75 MiB | 3.55 MiB/s

Receiving objects: 28% (343/1197), 29.25 MiB | 3.55 MiB/s

Receiving objects: 28% (345/1197), 31.09 MiB | 3.55 MiB/s

Receiving objects: 28% (347/1197), 34.76 MiB | 3.55 MiB/s

Receiving objects: 29% (348/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 29% (350/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 30% (360/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 31% (372/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 32% (384/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 33% (396/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 34% (407/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 35% (419/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 36% (431/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 37% (443/1197), 38.43 MiB | 3.55 MiB/s

Receiving objects: 38% (455/1197), 40.26 MiB | 3.55 MiB/s

Receiving objects: 39% (467/1197), 40.26 MiB | 3.55 MiB/s

Receiving objects: 40% (479/1197), 40.26 MiB | 3.55 MiB/s

Receiving objects: 41% (491/1197), 40.26 MiB | 3.55 MiB/s

Receiving objects: 42% (503/1197), 40.26 MiB | 3.55 MiB/s

Receiving objects: 43% (515/1197), 40.26 MiB | 3.55 MiB/s

Receiving objects: 44% (527/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 45% (539/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 46% (551/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 47% (563/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 48% (575/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 49% (587/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 50% (599/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 51% (611/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 52% (623/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 53% (635/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 54% (647/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 55% (659/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 56% (671/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 57% (683/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 58% (695/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 58% (700/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 59% (707/1197), 42.10 MiB | 3.55 MiB/s

Receiving objects: 59% (715/1197), 47.60 MiB | 3.55 MiB/s

Receiving objects: 59% (715/1197), 51.26 MiB | 3.55 MiB/s

Receiving objects: 59% (715/1197), 54.93 MiB | 3.55 MiB/s

Receiving objects: 59% (715/1197), 56.75 MiB | 3.55 MiB/s

Receiving objects: 60% (719/1197), 56.75 MiB | 3.55 MiB/s

Receiving objects: 61% (731/1197), 56.75 MiB | 3.55 MiB/s

Receiving objects: 62% (743/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 63% (755/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 64% (767/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 65% (779/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 66% (791/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 67% (802/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 68% (814/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 69% (826/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 70% (838/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 71% (850/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 72% (862/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 73% (874/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 74% (886/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 75% (898/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 76% (910/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 77% (922/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 78% (934/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 79% (946/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 80% (958/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 81% (970/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 82% (982/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 83% (994/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 84% (1006/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 85% (1018/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 86% (1030/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 87% (1042/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 88% (1054/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 89% (1066/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 90% (1078/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 91% (1090/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 92% (1102/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 93% (1114/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 94% (1126/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 95% (1138/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 96% (1150/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 97% (1162/1197), 58.59 MiB | 3.55 MiB/s

Receiving objects: 97% (1172/1197), 60.42 MiB | 3.55 MiB/s

Receiving objects: 97% (1172/1197), 64.08 MiB | 3.55 MiB/s

Receiving objects: 97% (1172/1197), 67.75 MiB | 3.55 MiB/s

Receiving objects: 97% (1172/1197), 71.41 MiB | 3.55 MiB/s

remote: Total 1197 (delta 0), reused 0 (delta 0), pack-reused 1197[K

Receiving objects: 98% (1174/1197), 73.25 MiB | 3.55 MiB/s

Receiving objects: 99% (1186/1197), 73.25 MiB | 3.55 MiB/s

Receiving objects: 100% (1197/1197), 73.25 MiB | 3.55 MiB/s

Receiving objects: 100% (1197/1197), 74.23 MiB | 3.54 MiB/s, done.

Resolving deltas: 0% (0/520)

Resolving deltas: 1% (9/520)

Resolving deltas: 2% (15/520)

Resolving deltas: 3% (17/520)

Resolving deltas: 4% (21/520)

Resolving deltas: 5% (26/520)

Resolving deltas: 6% (32/520)

Resolving deltas: 8% (42/520)

Resolving deltas: 9% (50/520)

Resolving deltas: 10% (52/520)

Resolving deltas: 11% (58/520)

Resolving deltas: 13% (68/520)

Resolving deltas: 14% (73/520)

Resolving deltas: 16% (87/520)

Resolving deltas: 17% (91/520)

Resolving deltas: 21% (113/520)

Resolving deltas: 22% (116/520)

Resolving deltas: 23% (123/520)

Resolving deltas: 26% (140/520)

Resolving deltas: 32% (171/520)

Resolving deltas: 33% (172/520)

Resolving deltas: 34% (181/520)

Resolving deltas: 35% (182/520)

Resolving deltas: 36% (188/520)

Resolving deltas: 38% (202/520)

Resolving deltas: 39% (204/520)

Resolving deltas: 40% (211/520)

Resolving deltas: 48% (252/520)

Resolving deltas: 49% (255/520)

Resolving deltas: 51% (267/520)

Resolving deltas: 52% (271/520)

Resolving deltas: 53% (279/520)

Resolving deltas: 57% (300/520)

Resolving deltas: 66% (345/520)

Resolving deltas: 67% (349/520)

Resolving deltas: 68% (354/520)

Resolving deltas: 69% (361/520)

Resolving deltas: 70% (365/520)

Resolving deltas: 71% (371/520)

Resolving deltas: 72% (375/520)

Resolving deltas: 73% (380/520)

Resolving deltas: 74% (385/520)

Resolving deltas: 75% (394/520)

Resolving deltas: 76% (396/520)

Resolving deltas: 77% (401/520)

Resolving deltas: 78% (406/520)

Resolving deltas: 80% (416/520)

Resolving deltas: 81% (424/520)

Resolving deltas: 83% (436/520)

Resolving deltas: 84% (437/520)

Resolving deltas: 85% (442/520)

Resolving deltas: 86% (450/520)

Resolving deltas: 87% (454/520)

Resolving deltas: 89% (463/520)

Resolving deltas: 90% (469/520)

Resolving deltas: 91% (477/520)

Resolving deltas: 93% (488/520)

Resolving deltas: 94% (489/520)

Resolving deltas: 95% (494/520)

Resolving deltas: 96% (502/520)

Resolving deltas: 97% (507/520)

Resolving deltas: 98% (514/520)

Resolving deltas: 99% (515/520)

Resolving deltas: 100% (520/520)

Resolving deltas: 100% (520/520), done.

/opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-609/.workspace/scm/ov-notebook/notebooks/226-yolov7-optimization/yolov7

# Download pre-trained model weights

MODEL_LINK = "https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-tiny.pt"

DATA_DIR = Path("data/")

MODEL_DIR = Path("model/")

MODEL_DIR.mkdir(exist_ok=True)

DATA_DIR.mkdir(exist_ok=True)

download_file(MODEL_LINK, directory=MODEL_DIR, show_progress=True)

model/yolov7-tiny.pt: 0%| | 0.00/12.1M [00:00<?, ?B/s]

PosixPath('/opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-609/.workspace/scm/ov-notebook/notebooks/226-yolov7-optimization/yolov7/model/yolov7-tiny.pt')

モデル推論を確認¶

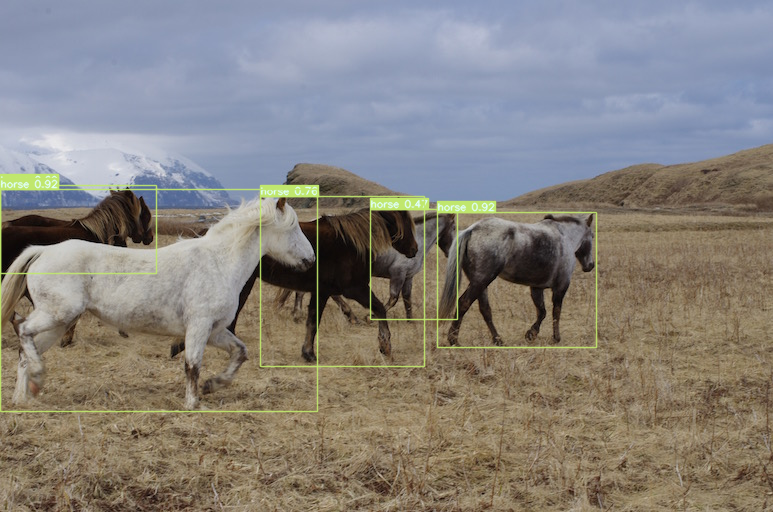

detect.py スクリプトは PyTorch モデル推論を実行し、結果として画像を保存します。

!python -W ignore detect.py --weights model/yolov7-tiny.pt --conf 0.25 --img-size 640 --source inference/images/horses.jpg

Namespace(agnostic_nms=False, augment=False, classes=None, conf_thres=0.25, device='', exist_ok=False, img_size=640, iou_thres=0.45, name='exp', no_trace=False, nosave=False, project='runs/detect', save_conf=False, save_txt=False, source='inference/images/horses.jpg', update=False, view_img=False, weights=['model/yolov7-tiny.pt'])

YOLOR 🚀 v0.1-128-ga207844 torch 1.13.1+cpu CPU

Fusing layers...

Model Summary: 200 layers, 6219709 parameters, 229245 gradients

Convert model to Traced-model...

traced_script_module saved!

model is traced!

5 horses, Done. (70.4ms) Inference, (0.8ms) NMS

The image with the result is saved in: runs/detect/exp/horses.jpg

Done. (0.084s)

from PIL import Image

# visualize prediction result

Image.open('runs/detect/exp/horses.jpg')

ONNX へエクスポート¶

モデルの ONNX 形式をエクスポートするには、export.py スクリプトを使用します。引数を確認します。

!python export.py --help

Import onnx_graphsurgeon failure: No module named 'onnx_graphsurgeon'

usage: export.py [-h] [--weights WEIGHTS] [--img-size IMG_SIZE [IMG_SIZE ...]]

[--batch-size BATCH_SIZE] [--dynamic] [--dynamic-batch]

[--grid] [--end2end] [--max-wh MAX_WH] [--topk-all TOPK_ALL]

[--iou-thres IOU_THRES] [--conf-thres CONF_THRES]

[--device DEVICE] [--simplify] [--include-nms] [--fp16]

[--int8]

optional arguments:

-h, --help show this help message and exit

--weights WEIGHTS weights path

--img-size IMG_SIZE [IMG_SIZE ...]

image size

--batch-size BATCH_SIZE

batch size

--dynamic dynamic ONNX axes

--dynamic-batch dynamic batch onnx for tensorrt and onnx-runtime

--grid export Detect() layer grid

--end2end export end2end onnx

--max-wh MAX_WH None for tensorrt nms, int value for onnx-runtime nms

--topk-all TOPK_ALL topk objects for every images

--iou-thres IOU_THRES

iou threshold for NMS

--conf-thres CONF_THRES

conf threshold for NMS

--device DEVICE cuda device, i.e. 0 or 0,1,2,3 or cpu

--simplify simplify onnx model

--include-nms export end2end onnx

--fp16 CoreML FP16 half-precision export

--int8 CoreML INT8 quantization

最も重要なパラメーター:

--weights- モデル重みチェックポイントへのパス--img-size- onnx トレースの入力画像のサイズ

PyTorch から ONNX モデルをエクスポートする際に、モデルに後処理結果を含める構成可能なパラメーターを設定することがあります。

--end2end- 後処理を含む完全なモデルを onnx にエクスポート--grid- 検出レイヤーをモデルの一部としてエクスポート--topk-all- すべての画像の上位 k 要素--iou-thres- NMS の結合しきい値を超える交点--conf-thres- 最小信頼しきい値--max-wh- NMS の境界ボックスの最大幅と高さ

モデルに後処理全体を含めると、よりパフォーマンスの高い結果を実現できますが、同時にモデルの柔軟性が低下し、完全な精度の再現性が保証されなくなります。元の pytorch モデルの結果形式を保持するのに --grid パラメーターのみを追加するのはそのためです。end2end ONNX モデルの操作方法を理解したい場合は、このノートブックを確認してください。

!python -W ignore export.py --weights model/yolov7-tiny.pt --grid

Import onnx_graphsurgeon failure: No module named 'onnx_graphsurgeon'

Namespace(batch_size=1, conf_thres=0.25, device='cpu', dynamic=False, dynamic_batch=False, end2end=False, fp16=False, grid=True, img_size=[640, 640], include_nms=False, int8=False, iou_thres=0.45, max_wh=None, simplify=False, topk_all=100, weights='model/yolov7-tiny.pt')

YOLOR 🚀 v0.1-128-ga207844 torch 1.13.1+cpu CPU

Fusing layers...

Model Summary: 200 layers, 6219709 parameters, 6219709 gradients

Starting TorchScript export with torch 1.13.1+cpu...

TorchScript export success, saved as model/yolov7-tiny.torchscript.pt

CoreML export failure: No module named 'coremltools'

Starting TorchScript-Lite export with torch 1.13.1+cpu...

TorchScript-Lite export success, saved as model/yolov7-tiny.torchscript.ptl

Starting ONNX export with onnx 1.15.0...

ONNX export success, saved as model/yolov7-tiny.onnx

Export complete (2.36s). Visualize with https://github.com/lutzroeder/netron.

ONNX モデルを OpenVINO 中間表現 (IR) に変換¶

ONNX モデルは OpenVINO ランタイムによって直接サポートされていますが、OpenVINO モデル変換 API 機能を活用するには、それらを IR 形式に変換すると便利です。モデル変換 API の ov.convert_model Python 関数を使用してモデルを変換できます。この関数は、Python インターフェイスで使用できる OpenVINO モデルクラスのインスタンスを返します。ただし、将来の実行のために、ov.save_model を使用して OpenVINO IR 形式でデバイスに保存することもできます。

import openvino as ov

model = ov.convert_model('model/yolov7-tiny.onnx')

# serialize model for saving IR

ov.save_model(model, 'model/yolov7-tiny.xml')

モデルの推論を検証¶

モデルの動作をテストするため、detect.py に似た推論パイプラインを作成します。パイプラインは、前処理ステップ、OpenVINO モデルの推論、および境界ボックスを取得する結果の後処理で構成されます。

前処理¶

モデル入力は [1, 3, 640, 640] の形状を持つ N, C, H, W 形式のテンソルです。

説明:

N- バッチ内の画像数 (バッチサイズ)C- 画像チャネルH- 画像の髙さW- 画像の幅

モデルは、RGB チャネル形式で [0, 1] の範囲で正規化された画像を想定しています。モデルのサイズに合わせて画像のサイズを変更するには、幅と高さのアスペクト比が維持されるレターボックスのサイズ変更アプローチが使用されます。yolov7 リポジトリーで定義されています。

特定の形状を維持するため、前処理によってパディングが自動的に有効になります。

import numpy as np

import torch

from PIL import Image

from utils.datasets import letterbox

from utils.plots import plot_one_box

def preprocess_image(img0: np.ndarray):

"""

Preprocess image according to YOLOv7 input requirements.

Takes image in np.array format, resizes it to specific size using letterbox resize, converts color space from BGR (default in OpenCV) to RGB and changes data layout from HWC to CHW.

Parameters:

img0 (np.ndarray): image for preprocessing

Returns:

img (np.ndarray): image after preprocessing

img0 (np.ndarray): original image

"""

# resize

img = letterbox(img0, auto=False)[0]

# Convert

img = img.transpose(2, 0, 1)

img = np.ascontiguousarray(img)

return img, img0

def prepare_input_tensor(image: np.ndarray):

"""

Converts preprocessed image to tensor format according to YOLOv7 input requirements.

Takes image in np.array format with unit8 data in [0, 255] range and converts it to torch.Tensor object with float data in [0, 1] range

Parameters:

image (np.ndarray): image for conversion to tensor

Returns:

input_tensor (torch.Tensor): float tensor ready to use for YOLOv7 inference

"""

input_tensor = image.astype(np.float32) # uint8 to fp16/32

input_tensor /= 255.0 # 0 - 255 to 0.0 - 1.0

if input_tensor.ndim == 3:

input_tensor = np.expand_dims(input_tensor, 0)

return input_tensor

# label names for visualization

DEFAULT_NAMES = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

'hair drier', 'toothbrush']

# obtain class names from model checkpoint

state_dict = torch.load("model/yolov7-tiny.pt", map_location="cpu")

if hasattr(state_dict["model"], "module"):

NAMES = getattr(state_dict["model"].module, "names", DEFAULT_NAMES)

else:

NAMES = getattr(state_dict["model"], "names", DEFAULT_NAMES)

del state_dict

# colors for visualization

COLORS = {name: [np.random.randint(0, 255) for _ in range(3)]

for i, name in enumerate(NAMES)}

後処理¶

モデル出力には検出ボックスの候補が含まれます。これは、B, N, 85 形式の [1,25200,85] の形状を持つテンソルです。

説明:

B- バッチサイズN- 検出ボックスの数

検出ボックスの形式は [x, y, h, w, box_score, class_no_1, …, class_no_80] です。

説明:

(

x,y) - ボックス中心の生座標h、w- ボックスの生の高さと幅box_score- 検出ボックスの信頼度class_no_1, …,class_no_80- クラス全体の確率分布

最終的な予測を得るには、非最大抑制アルゴリズムを適用し、ボックスの座標を元の画像サイズに再スケールする必要があります。

from typing import List, Tuple, Dict

from utils.general import scale_coords, non_max_suppression

def detect(model: ov.Model, image_path: Path, conf_thres: float = 0.25, iou_thres: float = 0.45, classes: List[int] = None, agnostic_nms: bool = False):

"""

OpenVINO YOLOv7 model inference function. Reads image, preprocess it, runs model inference and postprocess results using NMS.

Parameters:

model (Model): OpenVINO compiled model.

image_path (Path): input image path.

conf_thres (float, *optional*, 0.25): minimal accpeted confidence for object filtering

iou_thres (float, *optional*, 0.45): minimal overlap score for remloving objects duplicates in NMS

classes (List[int], *optional*, None): labels for prediction filtering, if not provided all predicted labels will be used

agnostic_nms (bool, *optiona*, False): apply class agnostinc NMS approach or not

Returns:

pred (List): list of detections with (n,6) shape, where n - number of detected boxes in format [x1, y1, x2, y2, score, label]

orig_img (np.ndarray): image before preprocessing, can be used for results visualization

inpjut_shape (Tuple[int]): shape of model input tensor, can be used for output rescaling

"""

output_blob = model.output(0)

img = np.array(Image.open(image_path))

preprocessed_img, orig_img = preprocess_image(img)

input_tensor = prepare_input_tensor(preprocessed_img)

predictions = torch.from_numpy(model(input_tensor)[output_blob])

pred = non_max_suppression(predictions, conf_thres, iou_thres, classes=classes, agnostic=agnostic_nms)

return pred, orig_img, input_tensor.shape

def draw_boxes(predictions: np.ndarray, input_shape: Tuple[int], image: np.ndarray, names: List[str], colors: Dict[str, int]):

"""

Utility function for drawing predicted bounding boxes on image

Parameters:

predictions (np.ndarray): list of detections with (n,6) shape, where n - number of detected boxes in format [x1, y1, x2, y2, score, label]

image (np.ndarray): image for boxes visualization

names (List[str]): list of names for each class in dataset

colors (Dict[str, int]): mapping between class name and drawing color

Returns:

image (np.ndarray): box visualization result

"""

if not len(predictions):

return image

# Rescale boxes from input size to original image size

predictions[:, :4] = scale_coords(input_shape[2:], predictions[:, :4], image.shape).round()

# Write results

for *xyxy, conf, cls in reversed(predictions):

label = f'{names[int(cls)]} {conf:.2f}'

plot_one_box(xyxy, image, label=label, color=colors[names[int(cls)]], line_thickness=1)

return image

core = ov.Core()

# read converted model

model = core.read_model('model/yolov7-tiny.xml')

推論デバイスの選択¶

OpenVINO を使用して推論を実行するためにドロップダウン・リストからデバイスを選択します。

import ipywidgets as widgets

device = widgets.Dropdown(

options=core.available_devices + ["AUTO"],

value='AUTO',

description='Device:',

disabled=False,

)

device

Dropdown(description='Device:', index=1, options=('CPU', 'AUTO'), value='AUTO')

# load model on CPU device

compiled_model = core.compile_model(model, device.value)

boxes, image, input_shape = detect(compiled_model, 'inference/images/horses.jpg')

image_with_boxes = draw_boxes(boxes[0], input_shape, image, NAMES, COLORS)

# visualize results

Image.fromarray(image_with_boxes)

モデルの精度を検証¶

データセットのダウンロード¶

YOLOv7 tiny は COCO データセットで事前トレーニングされているため、モデルの精度を評価するには、ダウンロードする必要があります。YOLOv7 リポジトリーで提供されている指示に従って、元のモデル評価スクリプトで使用するため、モデルの作成者が使用した形式でアノテーションをダウンロードする必要もあります。

from zipfile import ZipFile

sys.path.append("../../utils")

from notebook_utils import download_file

DATA_URL = "http://images.cocodataset.org/zips/val2017.zip"

LABELS_URL = "https://github.com/ultralytics/yolov5/releases/download/v1.0/coco2017labels-segments.zip"

OUT_DIR = Path('.')

download_file(DATA_URL, directory=OUT_DIR, show_progress=True)

download_file(LABELS_URL, directory=OUT_DIR, show_progress=True)

if not (OUT_DIR / "coco/labels").exists():

with ZipFile('coco2017labels-segments.zip' , "r") as zip_ref:

zip_ref.extractall(OUT_DIR)

with ZipFile('val2017.zip' , "r") as zip_ref:

zip_ref.extractall(OUT_DIR / 'coco/images')

val2017.zip: 0%| | 0.00/778M [00:00<?, ?B/s]

coco2017labels-segments.zip: 0%| | 0.00/169M [00:00<?, ?B/s]

データローダーを作成¶

from collections import namedtuple

import yaml

from utils.datasets import create_dataloader

from utils.general import check_dataset, box_iou, xywh2xyxy, colorstr

# read dataset config

DATA_CONFIG = 'data/coco.yaml'

with open(DATA_CONFIG) as f:

data = yaml.load(f, Loader=yaml.SafeLoader)

# Dataloader

TASK = 'val' # path to train/val/test images

Option = namedtuple('Options', ['single_cls']) # imitation of commandline provided options for single class evaluation

opt = Option(False)

dataloader = create_dataloader(

data[TASK], 640, 1, 32, opt, pad=0.5,

prefix=colorstr(f'{TASK}: ')

)[0]

Scanning images: 0%| | 0/5000 [00:00<?, ?it/s]

val: Scanning 'coco/val2017' images and labels... 292 found, 1 missing, 0 empty, 0 corrupted: 6%|▌ | 293/5000 [00:00<00:01, 2923.29it/s]

val: Scanning 'coco/val2017' images and labels... 580 found, 6 missing, 0 empty, 0 corrupted: 12%|█▏ | 586/5000 [00:00<00:01, 2924.54it/s]

val: Scanning 'coco/val2017' images and labels... 871 found, 8 missing, 0 empty, 0 corrupted: 18%|█▊ | 879/5000 [00:00<00:01, 2922.27it/s]

val: Scanning 'coco/val2017' images and labels... 1170 found, 10 missing, 0 empty, 0 corrupted: 24%|██▎ | 1180/5000 [00:00<00:01, 2955.18it/s]

val: Scanning 'coco/val2017' images and labels... 1466 found, 10 missing, 0 empty, 0 corrupted: 30%|██▉ | 1476/5000 [00:00<00:01, 2934.54it/s]

val: Scanning 'coco/val2017' images and labels... 1756 found, 14 missing, 0 empty, 0 corrupted: 35%|███▌ | 1770/5000 [00:00<00:01, 2776.60it/s]

val: Scanning 'coco/val2017' images and labels... 2049 found, 16 missing, 0 empty, 0 corrupted: 41%|████▏ | 2065/5000 [00:00<00:01, 2829.21it/s]

val: Scanning 'coco/val2017' images and labels... 2351 found, 22 missing, 0 empty, 0 corrupted: 47%|████▋ | 2373/5000 [00:00<00:00, 2905.88it/s]

val: Scanning 'coco/val2017' images and labels... 2641 found, 24 missing, 0 empty, 0 corrupted: 53%|█████▎ | 2665/5000 [00:00<00:00, 2898.47it/s]

val: Scanning 'coco/val2017' images and labels... 2938 found, 28 missing, 0 empty, 0 corrupted: 59%|█████▉ | 2966/5000 [00:01<00:00, 2930.42it/s]

val: Scanning 'coco/val2017' images and labels... 3236 found, 31 missing, 0 empty, 0 corrupted: 65%|██████▌ | 3267/5000 [00:01<00:00, 2952.50it/s]

val: Scanning 'coco/val2017' images and labels... 3529 found, 34 missing, 0 empty, 0 corrupted: 71%|███████▏ | 3563/5000 [00:01<00:00, 2951.52it/s]

val: Scanning 'coco/val2017' images and labels... 3824 found, 35 missing, 0 empty, 0 corrupted: 77%|███████▋ | 3859/5000 [00:01<00:00, 2947.45it/s]

val: Scanning 'coco/val2017' images and labels... 4117 found, 39 missing, 0 empty, 0 corrupted: 83%|████████▎ | 4156/5000 [00:01<00:00, 2953.34it/s]

val: Scanning 'coco/val2017' images and labels... 4413 found, 42 missing, 0 empty, 0 corrupted: 89%|████████▉ | 4455/5000 [00:01<00:00, 2962.81it/s]

val: Scanning 'coco/val2017' images and labels... 4709 found, 46 missing, 0 empty, 0 corrupted: 95%|█████████▌| 4755/5000 [00:01<00:00, 2971.05it/s]

val: Scanning 'coco/val2017' images and labels... 4952 found, 48 missing, 0 empty, 0 corrupted: 100%|██████████| 5000/5000 [00:01<00:00, 2932.19it/s]

評価関数を定義¶

この場合、YOLOv7 リポジトリーで提供されている検証メトリックを変更して再利用します (余分な手順を削除)。元のモデル評価手順はこのファイルにあります

import numpy as np

from tqdm.notebook import tqdm

from utils.metrics import ap_per_class

from openvino.runtime import Tensor

def test(data,

model: ov.Model,

dataloader: torch.utils.data.DataLoader,

conf_thres: float = 0.001,

iou_thres: float = 0.65, # for NMS

single_cls: bool = False,

v5_metric: bool = False,

names: List[str] = None,

num_samples: int = None

):

"""

YOLOv7 accuracy evaluation. Processes validation dataset and compites metrics.

Parameters:

model (ov.Model): OpenVINO compiled model.

dataloader (torch.utils.DataLoader): validation dataset.

conf_thres (float, *optional*, 0.001): minimal confidence threshold for keeping detections

iou_thres (float, *optional*, 0.65): IOU threshold for NMS

single_cls (bool, *optional*, False): class agnostic evaluation

v5_metric (bool, *optional*, False): use YOLOv5 evaluation approach for metrics calculation

names (List[str], *optional*, None): names for each class in dataset

num_samples (int, *optional*, None): number samples for testing

Returns:

mp (float): mean precision

mr (float): mean recall

map50 (float): mean average precision at 0.5 IOU threshold

map (float): mean average precision at 0.5:0.95 IOU thresholds

maps (Dict(int, float): average precision per class

seen (int): number of evaluated images

labels (int): number of labels

"""

model_output = model.output(0)

check_dataset(data) # check

nc = 1 if single_cls else int(data['nc']) # number of classes

iouv = torch.linspace(0.5, 0.95, 10) # iou vector for mAP@0.5:0.95

niou = iouv.numel()

if v5_metric:

print("Testing with YOLOv5 AP metric...")

seen = 0

p, r, mp, mr, map50, map = 0., 0., 0., 0., 0., 0.

stats, ap, ap_class = [], [], []

for sample_id, (img, targets, _, shapes) in enumerate(tqdm(dataloader)):

if num_samples is not None and sample_id == num_samples:

break

img = prepare_input_tensor(img.numpy())

targets = targets

height, width = img.shape[2:]

with torch.no_grad():

# Run model

out = torch.from_numpy(model(Tensor(img))[model_output]) # inference output

# Run NMS

targets[:, 2:] *= torch.Tensor([width, height, width, height]) # to pixels

out = non_max_suppression(out, conf_thres=conf_thres, iou_thres=iou_thres, labels=None, multi_label=True)

# Statistics per image

for si, pred in enumerate(out):

labels = targets[targets[:, 0] == si, 1:]

nl = len(labels)

tcls = labels[:, 0].tolist() if nl else [] # target class

seen += 1

if len(pred) == 0:

if nl:

stats.append((torch.zeros(0, niou, dtype=torch.bool), torch.Tensor(), torch.Tensor(), tcls))

continue

# Predictions

predn = pred.clone()

scale_coords(img[si].shape[1:], predn[:, :4], shapes[si][0], shapes[si][1]) # native-space pred

# Assign all predictions as incorrect

correct = torch.zeros(pred.shape[0], niou, dtype=torch.bool, device='cpu')

if nl:

detected = [] # target indices

tcls_tensor = labels[:, 0]

# target boxes

tbox = xywh2xyxy(labels[:, 1:5])

scale_coords(img[si].shape[1:], tbox, shapes[si][0], shapes[si][1]) # native-space labels

# Per target class

for cls in torch.unique(tcls_tensor):

ti = (cls == tcls_tensor).nonzero(as_tuple=False).view(-1) # prediction indices

pi = (cls == pred[:, 5]).nonzero(as_tuple=False).view(-1) # target indices

# Search for detections

if pi.shape[0]:

# Prediction to target ious

ious, i = box_iou(predn[pi, :4], tbox[ti]).max(1) # best ious, indices

# Append detections

detected_set = set()

for j in (ious > iouv[0]).nonzero(as_tuple=False):

d = ti[i[j]] # detected target

if d.item() not in detected_set:

detected_set.add(d.item())

detected.append(d)

correct[pi[j]] = ious[j] > iouv # iou_thres is 1xn

if len(detected) == nl: # all targets already located in image

break

# Append statistics (correct, conf, pcls, tcls)

stats.append((correct.cpu(), pred[:, 4].cpu(), pred[:, 5].cpu(), tcls))

# Compute statistics

stats = [np.concatenate(x, 0) for x in zip(*stats)] # to numpy

if len(stats) and stats[0].any():

p, r, ap, f1, ap_class = ap_per_class(*stats, plot=True, v5_metric=v5_metric, names=names)

ap50, ap = ap[:, 0], ap.mean(1) # AP@0.5, AP@0.5:0.95

mp, mr, map50, map = p.mean(), r.mean(), ap50.mean(), ap.mean()

nt = np.bincount(stats[3].astype(np.int64), minlength=nc) # number of targets per class

else:

nt = torch.zeros(1)

maps = np.zeros(nc) + map

for i, c in enumerate(ap_class):

maps[c] = ap[i]

return mp, mr, map50, map, maps, seen, nt.sum()

検証機能は、次の精度メトリックのリストを報告します。

Precisionは、関連するオブジェクトのみを識別するモデルの正確さの度合いです。Recallは、モデルがすべてのグラウンド・トゥルース・オブジェクトを検出する能力を測定します。mAP@t- 平均精度は、データセット内のすべてのクラスにわたって集計された適合率と再現率曲線下の領域として表されます。ここで、tは 交差ユニオン (IOU) しきい値、つまり、グラウンドトゥルースと予測されたオブジェクト間の重複の度合いです。したがって、mAP@.5は、平均精度が 0.5 IOU しきい値で計算されたことを示します。mAP@.5:.95は、ステップ 0.05 で 0.5 から 0.95 までの範囲の IOU しきい値で計算されます。

mp, mr, map50, map, maps, num_images, labels = test(data=data, model=compiled_model, dataloader=dataloader, names=NAMES)

# Print results

s = ('%20s' + '%12s' * 6) % ('Class', 'Images', 'Labels', 'Precision', 'Recall', 'mAP@.5', 'mAP@.5:.95')

print(s)

pf = '%20s' + '%12i' * 2 + '%12.3g' * 4 # print format

print(pf % ('all', num_images, labels, mp, mr, map50, map))

0%| | 0/5000 [00:00<?, ?it/s]

Class Images Labels Precision Recall mAP@.5 mAP@.5:.95

all 5000 36335 0.651 0.507 0.544 0.359

NNCF トレーニング後の量子化 API を使用してモデルを最適化¶

NNCF は、精度の低下を最小限に抑えながら、OpenVINO でニューラル・ネットワーク推論を最適化する一連の高度なアルゴリズムを提供します。YOLOv7 を最適化するため、ポストトレーニング・モード (微調整パイプラインなし) で 8 ビット量子化を使用します。

注: NNCF トレーニング後の量子化は、OpenVINO 2022.3 リリースのプレビュー機能として利用できます。次のリリースでは完全な機能サポートが提供される予定です。

最適化プロセスには次の手順が含まれます。

量子化用のデータセットを作成します。

nncf.quantizeを実行して、最適化されたモデルを取得します。openvino.runtime.serialize関数を使用して、OpenVINO IR モデルをシリアル化します。

量子化の精度テストで検証データローダーを再利用します。そのため、nncf.Dataset オブジェクトにラップし、入力テンソルのみを取得する変換関数を定義する必要があります。

import nncf # noqa: F811

def transform_fn(data_item):

"""

Quantization transform function. Extracts and preprocess input data from dataloader item for quantization.

Parameters:

data_item: Tuple with data item produced by DataLoader during iteration

Returns:

input_tensor: Input data for quantization

"""

img = data_item[0].numpy()

input_tensor = prepare_input_tensor(img)

return input_tensor

quantization_dataset = nncf.Dataset(dataloader, transform_fn)

INFO:nncf:NNCF initialized successfully. Supported frameworks detected: torch, tensorflow, onnx, openvino

nncf.quantize 関数は、モデルの量子化のインターフェイスを提供します。OpenVINO モデルのインスタンスと量子化データセットが必要です。オプションで、量子化プロセスの追加パラメーター (量子化のサンプル数、プリセット、無視される範囲など) を提供できます。YOLOv7 モデルには、活性化の非対称量子化を必要とする非 ReLU 活性化関数が含まれています。さらに良い結果を得るため、mixed 量子化プリセットを使用します。これは、重みの対称量子化とアクティベーションの非対称量子化を提供します。

quantized_model = nncf.quantize(model, quantization_dataset, preset=nncf.QuantizationPreset.MIXED)

ov.save_model(quantized_model, 'model/yolov7-tiny_int8.xml')

2024-02-09 23:58:09.840364: I tensorflow/core/util/port.cc:110] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable TF_ENABLE_ONEDNN_OPTS=0. 2024-02-09 23:58:09.872358: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations. To enable the following instructions: AVX2 AVX512F AVX512_VNNI FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

2024-02-09 23:58:10.416753: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

Output()

Output()

/opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-609/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages/nncf/experimental/tensor/tensor.py:84: RuntimeWarning: invalid value encountered in multiply

return Tensor(self.data * unwrap_tensor_data(other))

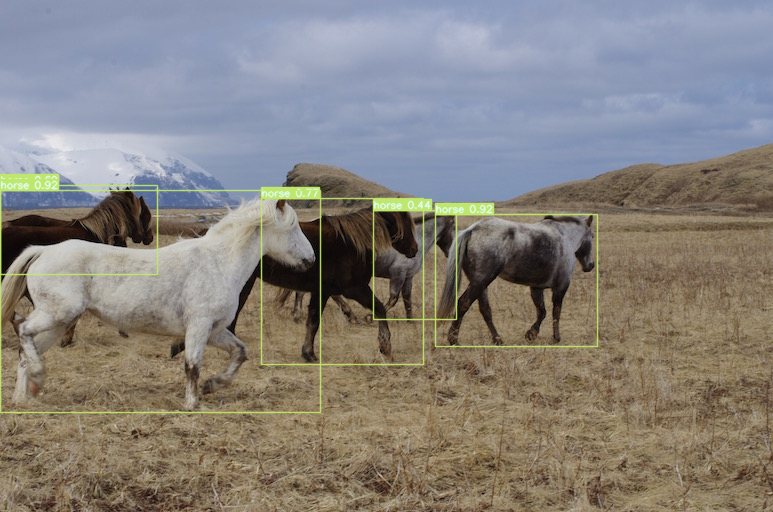

量子化モデルの推論を検証¶

device

Dropdown(description='Device:', index=1, options=('CPU', 'AUTO'), value='AUTO')

int8_compiled_model = core.compile_model(quantized_model, device.value)

boxes, image, input_shape = detect(int8_compiled_model, 'inference/images/horses.jpg')

image_with_boxes = draw_boxes(boxes[0], input_shape, image, NAMES, COLORS)

Image.fromarray(image_with_boxes)

量子化されたモデルの精度を検証¶

int8_result = test(data=data, model=int8_compiled_model, dataloader=dataloader, names=NAMES)

0%| | 0/5000 [00:00<?, ?it/s]

mp, mr, map50, map, maps, num_images, labels = int8_result

# Print results

s = ('%20s' + '%12s' * 6) % ('Class', 'Images', 'Labels', 'Precision', 'Recall', 'mAP@.5', 'mAP@.5:.95')

print(s)

pf = '%20s' + '%12i' * 2 + '%12.3g' * 4 # print format

print(pf % ('all', num_images, labels, mp, mr, map50, map))

Class Images Labels Precision Recall mAP@.5 mAP@.5:.95

all 5000 36335 0.634 0.509 0.54 0.353

ご覧のとおり、量子化後にモデルの精度がわずかに変化しました。ただし、出力画像を見ると、これらの変更は重要ではありません。

元のモデルと量子化モデルのパフォーマンスを比較¶

最後に、OpenVINO Benchmark ツールを使用して、FP32 と INT8 モデルの推論パフォーマンスを測定します。

注: より正確なパフォーマンスを得るには、他のアプリケーションを閉じて、ターミナル/コマンドプロンプトで

benchmark_appを実行することを推奨します。benchmark_app -m model.xml -d CPUを実行して、CPU で非同期推論のベンチマークを 1 分間実行します。GPU でベンチマークを行うには、CPUをGPUに変更します。benchmark_app --helpを実行すると、すべてのコマンドライン・オプションが表示されます。

device

Dropdown(description='Device:', index=1, options=('CPU', 'AUTO'), value='AUTO')

# Inference FP32 model (OpenVINO IR)

!benchmark_app -m model/yolov7-tiny.xml -d $device.value -api async

[Step 1/11] Parsing and validating input arguments

[ INFO ] Parsing input parameters

[Step 2/11] Loading OpenVINO Runtime

[ WARNING ] Default duration 120 seconds is used for unknown device AUTO

[ INFO ] OpenVINO:

[ INFO ] Build ................................. 2023.3.0-13775-ceeafaf64f3-releases/2023/3

[ INFO ]

[ INFO ] Device info:

[ INFO ] AUTO

[ INFO ] Build ................................. 2023.3.0-13775-ceeafaf64f3-releases/2023/3

[ INFO ]

[ INFO ]

[Step 3/11] Setting device configuration

[ WARNING ] Performance hint was not explicitly specified in command line. Device(AUTO) performance hint will be set to PerformanceMode.THROUGHPUT.

[Step 4/11] Reading model files

[ INFO ] Loading model files

[ INFO ] Read model took 13.39 ms

[ INFO ] Original model I/O parameters:

[ INFO ] Model inputs:

[ INFO ] images (node: images) : f32 / [...] / [1,3,640,640]

[ INFO ] Model outputs:

[ INFO ] output (node: output) : f32 / [...] / [1,25200,85]

[Step 5/11] Resizing model to match image sizes and given batch

[ INFO ] Model batch size: 1

[Step 6/11] Configuring input of the model

[ INFO ] Model inputs:

[ INFO ] images (node: images) : u8 / [N,C,H,W] / [1,3,640,640]

[ INFO ] Model outputs:

[ INFO ] output (node: output) : f32 / [...] / [1,25200,85]

[Step 7/11] Loading the model to the device

[ INFO ] Compile model took 272.70 ms

[Step 8/11] Querying optimal runtime parameters

[ INFO ] Model:

[ INFO ] NETWORK_NAME: torch_jit

[ INFO ] EXECUTION_DEVICES: ['CPU']

[ INFO ] PERFORMANCE_HINT: PerformanceMode.THROUGHPUT

[ INFO ] OPTIMAL_NUMBER_OF_INFER_REQUESTS: 6

[ INFO ] MULTI_DEVICE_PRIORITIES: CPU

[ INFO ] CPU:

[ INFO ] AFFINITY: Affinity.CORE

[ INFO ] CPU_DENORMALS_OPTIMIZATION: False

[ INFO ] CPU_SPARSE_WEIGHTS_DECOMPRESSION_RATE: 1.0

[ INFO ] ENABLE_CPU_PINNING: True

[ INFO ] ENABLE_HYPER_THREADING: True

[ INFO ] EXECUTION_DEVICES: ['CPU']

[ INFO ] EXECUTION_MODE_HINT: ExecutionMode.PERFORMANCE

[ INFO ] INFERENCE_NUM_THREADS: 24

[ INFO ] INFERENCE_PRECISION_HINT: <Type: 'float32'>

[ INFO ] NETWORK_NAME: torch_jit

[ INFO ] NUM_STREAMS: 6

[ INFO ] OPTIMAL_NUMBER_OF_INFER_REQUESTS: 6

[ INFO ] PERFORMANCE_HINT: THROUGHPUT

[ INFO ] PERFORMANCE_HINT_NUM_REQUESTS: 0

[ INFO ] PERF_COUNT: NO

[ INFO ] SCHEDULING_CORE_TYPE: SchedulingCoreType.ANY_CORE

[ INFO ] MODEL_PRIORITY: Priority.MEDIUM

[ INFO ] LOADED_FROM_CACHE: False

[Step 9/11] Creating infer requests and preparing input tensors

[ WARNING ] No input files were given for input 'images'!. This input will be filled with random values!

[ INFO ] Fill input 'images' with random values

[Step 10/11] Measuring performance (Start inference asynchronously, 6 inference requests, limits: 120000 ms duration)

[ INFO ] Benchmarking in inference only mode (inputs filling are not included in measurement loop).

[ INFO ] First inference took 43.02 ms

[Step 11/11] Dumping statistics report

[ INFO ] Execution Devices:['CPU']

[ INFO ] Count: 11586 iterations

[ INFO ] Duration: 120047.32 ms

[ INFO ] Latency:

[ INFO ] Median: 61.86 ms

[ INFO ] Average: 62.03 ms

[ INFO ] Min: 44.24 ms

[ INFO ] Max: 86.89 ms

[ INFO ] Throughput: 96.51 FPS

# Inference INT8 model (OpenVINO IR)

!benchmark_app -m model/yolov7-tiny_int8.xml -d $device.value -api async

[Step 1/11] Parsing and validating input arguments

[ INFO ] Parsing input parameters

[Step 2/11] Loading OpenVINO Runtime

[ WARNING ] Default duration 120 seconds is used for unknown device AUTO

[ INFO ] OpenVINO:

[ INFO ] Build ................................. 2023.3.0-13775-ceeafaf64f3-releases/2023/3

[ INFO ]

[ INFO ] Device info:

[ INFO ] AUTO

[ INFO ] Build ................................. 2023.3.0-13775-ceeafaf64f3-releases/2023/3

[ INFO ]

[ INFO ]

[Step 3/11] Setting device configuration

[ WARNING ] Performance hint was not explicitly specified in command line. Device(AUTO) performance hint will be set to PerformanceMode.THROUGHPUT.

[Step 4/11] Reading model files

[ INFO ] Loading model files

[ INFO ] Read model took 22.28 ms

[ INFO ] Original model I/O parameters:

[ INFO ] Model inputs:

[ INFO ] images (node: images) : f32 / [...] / [1,3,640,640]

[ INFO ] Model outputs:

[ INFO ] output (node: output) : f32 / [...] / [1,25200,85]

[Step 5/11] Resizing model to match image sizes and given batch

[ INFO ] Model batch size: 1

[Step 6/11] Configuring input of the model

[ INFO ] Model inputs:

[ INFO ] images (node: images) : u8 / [N,C,H,W] / [1,3,640,640]

[ INFO ] Model outputs:

[ INFO ] output (node: output) : f32 / [...] / [1,25200,85]

[Step 7/11] Loading the model to the device

[ INFO ] Compile model took 489.58 ms

[Step 8/11] Querying optimal runtime parameters

[ INFO ] Model:

[ INFO ] NETWORK_NAME: torch_jit

[ INFO ] EXECUTION_DEVICES: ['CPU']

[ INFO ] PERFORMANCE_HINT: PerformanceMode.THROUGHPUT

[ INFO ] OPTIMAL_NUMBER_OF_INFER_REQUESTS: 6

[ INFO ] MULTI_DEVICE_PRIORITIES: CPU

[ INFO ] CPU:

[ INFO ] AFFINITY: Affinity.CORE

[ INFO ] CPU_DENORMALS_OPTIMIZATION: False

[ INFO ] CPU_SPARSE_WEIGHTS_DECOMPRESSION_RATE: 1.0

[ INFO ] ENABLE_CPU_PINNING: True

[ INFO ] ENABLE_HYPER_THREADING: True

[ INFO ] EXECUTION_DEVICES: ['CPU']

[ INFO ] EXECUTION_MODE_HINT: ExecutionMode.PERFORMANCE

[ INFO ] INFERENCE_NUM_THREADS: 24

[ INFO ] INFERENCE_PRECISION_HINT: <Type: 'float32'>

[ INFO ] NETWORK_NAME: torch_jit

[ INFO ] NUM_STREAMS: 6

[ INFO ] OPTIMAL_NUMBER_OF_INFER_REQUESTS: 6

[ INFO ] PERFORMANCE_HINT: THROUGHPUT

[ INFO ] PERFORMANCE_HINT_NUM_REQUESTS: 0

[ INFO ] PERF_COUNT: NO

[ INFO ] SCHEDULING_CORE_TYPE: SchedulingCoreType.ANY_CORE

[ INFO ] MODEL_PRIORITY: Priority.MEDIUM

[ INFO ] LOADED_FROM_CACHE: False

[Step 9/11] Creating infer requests and preparing input tensors

[ WARNING ] No input files were given for input 'images'!. This input will be filled with random values!

[ INFO ] Fill input 'images' with random values

[Step 10/11] Measuring performance (Start inference asynchronously, 6 inference requests, limits: 120000 ms duration)

[ INFO ] Benchmarking in inference only mode (inputs filling are not included in measurement loop).

[ INFO ] First inference took 25.89 ms

[Step 11/11] Dumping statistics report

[ INFO ] Execution Devices:['CPU']

[ INFO ] Count: 33210 iterations

[ INFO ] Duration: 120019.44 ms

[ INFO ] Latency:

[ INFO ] Median: 21.49 ms

[ INFO ] Average: 21.57 ms

[ INFO ] Min: 15.74 ms

[ INFO ] Max: 43.27 ms

[ INFO ] Throughput: 276.71 FPS