Asynchronous Inference with OpenVINO™¶

This Jupyter notebook can be launched online, opening an interactive environment in a browser window. You can also install it locally.

This notebook demonstrates how to use the Async API for asynchronous execution with OpenVINO.

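OpenVINO Runtime supports inference in either synchronous or asynchronous mode. The key advantage of the Async API is this: while the device is busy with inference, the application can do other work in parallel, such as populating inputs or scheduling other requests, instead of waiting for the current inference to finish.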
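The difference between a blocking call and a non-blocking one can be sketched with the standard library alone. In this sketch a single-worker thread pool plays the role of the inference device, and `fake_infer` is a hypothetical placeholder for a real inference call. It only shows that the application keeps working while a request is in flight. It does not use the OpenVINO API.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_infer(x):
    # Hypothetical stand-in for device inference: sleep, then return a result
    time.sleep(0.05)
    return x * 2

def preprocess_next(x):
    # Work the application can do while the "device" is busy
    return x + 1

with ThreadPoolExecutor(max_workers=1) as device:
    future = device.submit(fake_infer, 10)  # returns immediately, like starting an async request
    next_input = preprocess_next(10)        # runs while fake_infer is still sleeping
    result = future.result()                # blocks until the "inference" is done

print(result, next_input)  # 20 11
```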


Imports¶

%pip install -q "openvino>=2023.1.0"

%pip install -q opencv-python matplotlib

Note: you may need to restart the kernel to use updated packages.


import cv2
import time
import numpy as np
import openvino as ov
from IPython import display
import matplotlib.pyplot as plt

# Fetch the notebook utils script from the openvino_notebooks repo
import urllib.request
urllib.request.urlretrieve(
    url='https://raw.githubusercontent.com/openvinotoolkit/openvino_notebooks/main/notebooks/utils/notebook_utils.py',
    filename='notebook_utils.py'
)

import notebook_utils as utils

Prepare the model and data processing¶

Download the test model¶

To start the test, we use a pre-trained model from OpenVINO's Open Model Zoo. The model runs on each frame of the video and detects people.

# directory where model will be downloaded
base_model_dir = "model"

# model name as named in Open Model Zoo
model_name = "person-detection-0202"
precision = "FP16"
model_path = (
    f"{base_model_dir}/intel/{model_name}/{precision}/{model_name}.xml"
)

# omz_downloader is a command-line tool shipped with the openvino-dev package
download_command = f"omz_downloader " \
                   f"--name {model_name} " \
                   f"--precision {precision} " \
                   f"--output_dir {base_model_dir} " \
                   f"--cache_dir {base_model_dir}"
! $download_command

################|| Downloading person-detection-0202 ||################

========== Downloading model/intel/person-detection-0202/FP16/person-detection-0202.xml


... 100%, 248 KB, 1859 KB/s, 0 seconds passed

========== Downloading model/intel/person-detection-0202/FP16/person-detection-0202.bin


... 100%, 3549 KB, 3419 KB/s, 1 seconds passed
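You can also build the download command as an argument list and run it with `subprocess.run`. This avoids shell quoting problems that can come with the `!` shell escape. The helper names below (`build_download_args`, `model_xml_path`, `download`) are hypothetical. The flags are the ones used above, and the path layout is the one visible in the download log.

```python
import os
import subprocess

def build_download_args(model_name, precision, output_dir, cache_dir):
    # One list element per token, with the same flags as the omz_downloader command above
    return [
        "omz_downloader",
        "--name", model_name,
        "--precision", precision,
        "--output_dir", output_dir,
        "--cache_dir", cache_dir,
    ]

def model_xml_path(base_dir, model_name, precision):
    # Layout seen in the download log: <base>/intel/<name>/<precision>/<name>.xml
    return os.path.join(base_dir, "intel", model_name, precision, f"{model_name}.xml")

def download(args):
    # Runs the downloader without a shell; raises CalledProcessError on failure
    subprocess.run(args, check=True)

args = build_download_args("person-detection-0202", "FP16", "model", "model")
xml_path = model_xml_path("model", "person-detection-0202", "FP16")
# download(args)  # uncomment to download; requires omz_downloader on PATH
```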

Load the model¶

# initialize OpenVINO runtime
core = ov.Core()

# read the network and corresponding weights from file
model = core.read_model(model=model_path)

# compile the model for the CPU (you can choose manually CPU, GPU etc.)
# or let the engine choose the best available device (AUTO)
compiled_model = core.compile_model(model=model, device_name="CPU")

# get input node
input_layer_ir = model.input(0)
N, C, H, W = input_layer_ir.shape
# cv2.resize expects the target size as (width, height)
shape = (W, H)
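OpenVINO reports the input shape in NCHW order, but `cv2.resize` expects its size argument as `(width, height)`. The two orders are easy to mix up, and they only agree for square inputs. The minimal helper below (a hypothetical name) makes the conversion explicit:

```python
def cv2_dsize_from_nchw(nchw_shape):
    # nchw_shape is (N, C, H, W); cv2.resize wants (width, height)
    n, c, h, w = (int(d) for d in nchw_shape)
    return (w, h)

print(cv2_dsize_from_nchw((1, 3, 512, 384)))  # (384, 512)
```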

Create functions for data processing¶

def preprocess(image):
    """
    Define the preprocess function for input data

    :param: image: the original input frame
    :returns:
            resized_image: the image processed
    """
    resized_image = cv2.resize(image, shape)
    resized_image = cv2.cvtColor(np.array(resized_image), cv2.COLOR_BGR2RGB)
    resized_image = resized_image.transpose((2, 0, 1))
    resized_image = np.expand_dims(resized_image, axis=0).astype(np.float32)
    return resized_image

def postprocess(result, image, fps):
    """
    Define the postprocess function for output data

    :param: result: the inference results
            image: the original input frame
            fps: average throughput calculated for each frame
    :returns:
            image: the image with bounding box and fps message
    """
    detections = result.reshape(-1, 7)
    for detection in detections:
        _, image_id, confidence, xmin, ymin, xmax, ymax = detection
        if confidence > 0.5:
            xmin = int(max((xmin * image.shape[1]), 10))
            ymin = int(max((ymin * image.shape[0]), 10))
            xmax = int(min((xmax * image.shape[1]), image.shape[1] - 10))
            ymax = int(min((ymax * image.shape[0]), image.shape[0] - 10))
            cv2.rectangle(image, (xmin, ymin), (xmax, ymax), (0, 255, 0), 2)
    cv2.putText(image, str(round(fps, 2)) + " fps", (5, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 3)
    return image
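The index arithmetic in `preprocess` and `postprocess` can be written out without NumPy or OpenCV, which makes the mapping easier to check. This is a pure-Python sketch with hypothetical helper names, using nested lists instead of arrays. Resizing is omitted. The sketch mirrors the logic of the functions above and is not meant to replace them.

```python
def hwc_bgr_to_nchw_rgb(image):
    # image[y][x] is a (B, G, R) pixel; result is [1][channel][y][x] in R, G, B order
    height, width, channels = len(image), len(image[0]), len(image[0][0])
    chw = [
        [[float(image[y][x][channels - 1 - k]) for x in range(width)] for y in range(height)]
        for k in range(channels)  # k = 0, 1, 2 selects R, G, B via the reversed BGR index
    ]
    return [chw]  # leading batch dimension, N = 1

def to_pixel_box(xmin, ymin, xmax, ymax, width, height, margin=10):
    # Scale normalized [0, 1] corners to pixels and keep them `margin` pixels inside
    # the image, mirroring the max(...)/min(...) clamping in postprocess
    return (
        int(max(xmin * width, margin)),
        int(max(ymin * height, margin)),
        int(min(xmax * width, width - margin)),
        int(min(ymax * height, height - margin)),
    )

sample_image = [[(1, 2, 3), (4, 5, 6)]]  # a 1 x 2 image of BGR pixels
print(hwc_bgr_to_nchw_rgb(sample_image)[0][0])  # [[3.0, 6.0]], the R channel
print(to_pixel_box(0.0, 0.25, 0.5, 1.0, width=640, height=480))  # (10, 120, 320, 470)
```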

Get the test video¶

video_path = 'https://storage.openvinotoolkit.org/repositories/openvino_notebooks/data/data/video/CEO%20Pat%20Gelsinger%20on%20Leading%20Intel.mp4'

How to improve the throughput of video processing¶

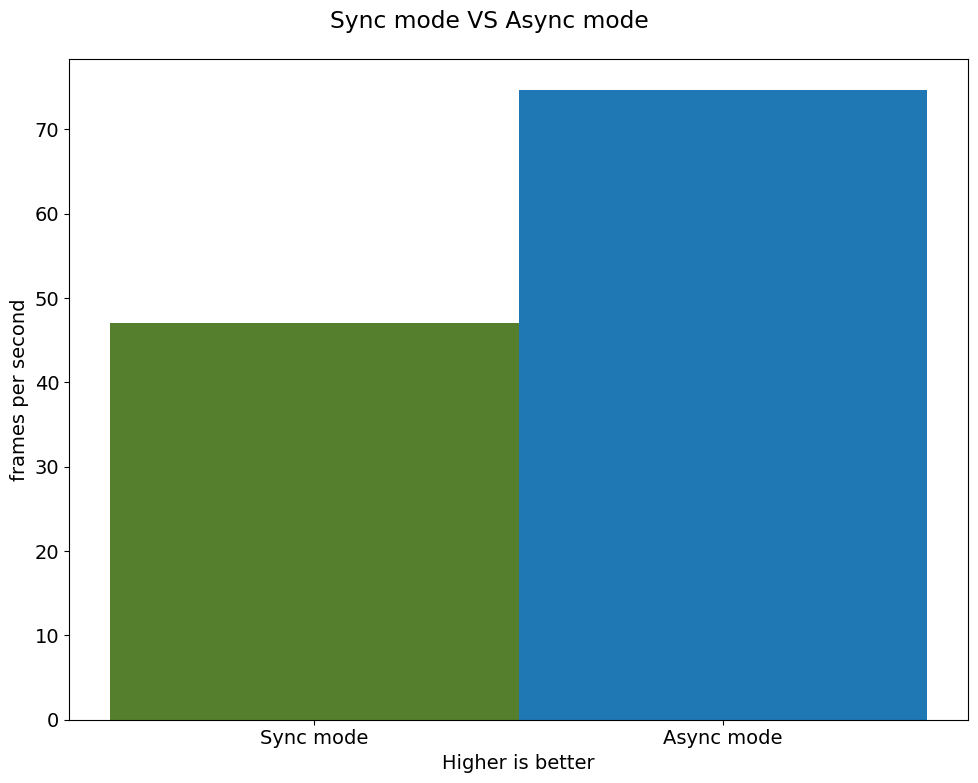

Below, we compare the performance of the synchronous and asynchronous approaches.

Sync mode (default)¶

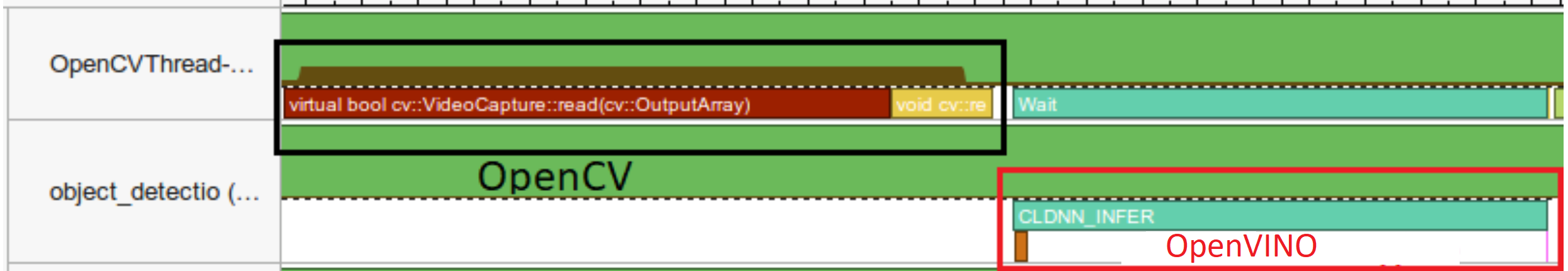

Let us see how video processing works with the default approach. With the synchronous approach, each frame is captured with OpenCV and then processed immediately:


while(true) {
    // capture frame
    // populate CURRENT InferRequest
    // Infer CURRENT InferRequest
    // this call is synchronous
    // display CURRENT result
}

def sync_api(source, flip, fps, use_popup, skip_first_frames):
    """
    Define the main function for video processing in sync mode

    :param: source: the video path or the ID of your webcam
    :returns:
            sync_fps: the inference throughput in sync mode
    """
    frame_number = 0
    infer_request = compiled_model.create_infer_request()
    player = None
    # initialize so the function can return even if no frame is processed
    sync_fps = 0
    try:
        # Create a video player
        player = utils.VideoPlayer(source, flip=flip, fps=fps, skip_first_frames=skip_first_frames)
        # Start capturing
        start_time = time.time()
        player.start()
        if use_popup:
            title = "Press ESC to Exit"
            cv2.namedWindow(title, cv2.WINDOW_GUI_NORMAL | cv2.WINDOW_AUTOSIZE)
        while True:
            frame = player.next()
            if frame is None:
                print("Source ended")
                break
            resized_frame = preprocess(frame)
            infer_request.set_tensor(input_layer_ir, ov.Tensor(resized_frame))
            # Start the inference request in synchronous mode
            infer_request.infer()
            res = infer_request.get_output_tensor(0).data
            stop_time = time.time()
            total_time = stop_time - start_time
            frame_number = frame_number + 1
            sync_fps = frame_number / total_time
            frame = postprocess(res, frame, sync_fps)
            # Display the results
            if use_popup:
                cv2.imshow(title, frame)
                key = cv2.waitKey(1)
                # escape = 27
                if key == 27:
                    break
            else:
                # Encode numpy array to jpg
                _, encoded_img = cv2.imencode(".jpg", frame, params=[cv2.IMWRITE_JPEG_QUALITY, 90])
                # Create IPython image
                i = display.Image(data=encoded_img)
                # Display the image in this notebook
                display.clear_output(wait=True)
                display.display(i)
    # ctrl-c
    except KeyboardInterrupt:
        print("Interrupted")
    # Any different error
    except RuntimeError as e:
        print(e)
    finally:
        if use_popup:
            cv2.destroyAllWindows()
        if player is not None:
            # stop capturing
            player.stop()
    return sync_fps

Test performance in sync mode¶

sync_fps = sync_api(source=video_path, flip=False, fps=30, use_popup=False, skip_first_frames=800)
print(f"average throughput in sync mode: {sync_fps:.2f} fps")

Source ended

average throughput in sync mode: 47.04 fps

Async mode¶

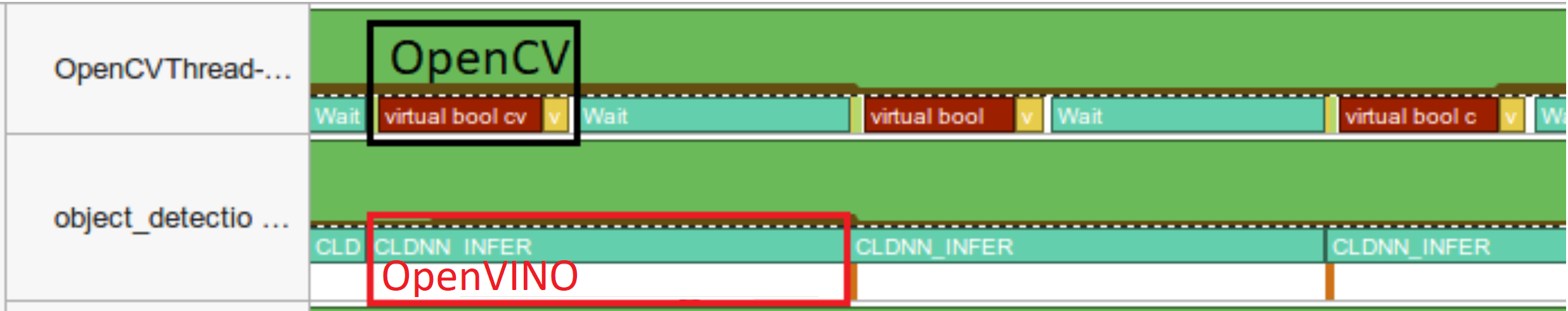

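Let us see how the OpenVINO Async API can improve the overall frame rate of an application. The key advantage of the asynchronous approach is this: while the device is busy with inference, the application can do other things in parallel, such as populating inputs or scheduling other requests, instead of waiting for the current inference to finish.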


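In the example below, inference is applied to the results of video decoding, so the application can keep multiple infer requests in flight. While the current request is being processed, the input frame for the next one is captured. This hides the capture latency. As a result, the overall frame rate is set only by the slowest stage of the pipeline (decoding or inference), not by the sum of the stages.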
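A simple two-stage model makes the "slowest stage" claim concrete. The sketch assumes a per-frame decode time `t_decode` and an inference time `t_infer`; the values are illustrative, not measurements from this notebook. The synchronous loop pays for both stages on every frame. An ideally overlapped pipeline is limited only by the slower stage.

```python
def sequential_fps(t_decode, t_infer):
    # Stages run back to back: each frame costs the sum of both stages
    return 1.0 / (t_decode + t_infer)

def overlapped_fps(t_decode, t_infer):
    # Ideal overlap: the steady-state rate is set by the slowest stage
    return 1.0 / max(t_decode, t_infer)

t_decode, t_infer = 0.010, 0.015  # seconds per frame (illustrative values)
print(round(sequential_fps(t_decode, t_infer), 1))  # 40.0
print(round(overlapped_fps(t_decode, t_infer), 1))  # 66.7
```

This is an upper bound. In practice, scheduling overhead and shared CPU resources keep the measured gain somewhat below it.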

while(true) {
    // capture frame
    // populate NEXT InferRequest
    // start NEXT InferRequest
    // this call is async and returns immediately
    // wait for the CURRENT InferRequest
    // display CURRENT result
    // swap CURRENT and NEXT InferRequests
}
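You can try the CURRENT/NEXT swapping from the pseudocode without OpenVINO. In this sketch, a hypothetical `FakeRequest` class mimics only the `start_async()`/`wait()` pattern of an infer request, using a background thread. It is not the OpenVINO `InferRequest` API.

```python
import threading
import time

class FakeRequest:
    """Hypothetical stand-in for an infer request that runs work on a background thread."""

    def __init__(self):
        self._thread = None
        self.result = None

    def start_async(self, frame):
        def work():
            time.sleep(0.01)          # pretend the device is busy
            self.result = frame * 10
        self._thread = threading.Thread(target=work)
        self._thread.start()          # returns immediately

    def wait(self):
        self._thread.join()           # block until this request has finished

def run_pipeline(frames):
    if not frames:
        return []
    outputs = []
    curr, nxt = FakeRequest(), FakeRequest()
    source = iter(frames)
    curr.start_async(next(source))    # prime the CURRENT request
    for frame in source:
        nxt.start_async(frame)        # start NEXT before waiting on CURRENT
        curr.wait()
        outputs.append(curr.result)   # "display" the CURRENT result
        curr, nxt = nxt, curr         # swap CURRENT and NEXT
    curr.wait()                       # drain the request still in flight
    outputs.append(curr.result)
    return outputs

print(run_pipeline([1, 2, 3, 4]))  # [10, 20, 30, 40]
```

Unlike `async_api` below, this sketch also waits for the request that is still running when the source ends, so the result for the last frame is not dropped.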

def async_api(source, flip, fps, use_popup, skip_first_frames):
    """
    Define the main function for video processing in async mode

    :param: source: the video path or the ID of your webcam
    :returns:
            async_fps: the inference throughput in async mode
    """
    frame_number = 0
    # Create 2 infer requests
    curr_request = compiled_model.create_infer_request()
    next_request = compiled_model.create_infer_request()
    player = None
    async_fps = 0
    try:
        # Create a video player
        player = utils.VideoPlayer(source, flip=flip, fps=fps, skip_first_frames=skip_first_frames)
        # Start capturing
        start_time = time.time()
        player.start()
        if use_popup:
            title = "Press ESC to Exit"
            cv2.namedWindow(title, cv2.WINDOW_GUI_NORMAL | cv2.WINDOW_AUTOSIZE)
        # Capture CURRENT frame
        frame = player.next()
        resized_frame = preprocess(frame)
        curr_request.set_tensor(input_layer_ir, ov.Tensor(resized_frame))
        # Start the CURRENT inference request
        curr_request.start_async()
        while True:
            # Capture NEXT frame
            next_frame = player.next()
            if next_frame is None:
                print("Source ended")
                break
            resized_frame = preprocess(next_frame)
            next_request.set_tensor(input_layer_ir, ov.Tensor(resized_frame))
            # Start the NEXT inference request
            next_request.start_async()
            # Waiting for CURRENT inference result
            curr_request.wait()
            res = curr_request.get_output_tensor(0).data
            stop_time = time.time()
            total_time = stop_time - start_time
            frame_number = frame_number + 1
            async_fps = frame_number / total_time
            frame = postprocess(res, frame, async_fps)
            # Display the results
            if use_popup:
                cv2.imshow(title, frame)
                key = cv2.waitKey(1)
                # escape = 27
                if key == 27:
                    break
            else:
                # Encode numpy array to jpg
                _, encoded_img = cv2.imencode(".jpg", frame, params=[cv2.IMWRITE_JPEG_QUALITY, 90])
                # Create IPython image
                i = display.Image(data=encoded_img)
                # Display the image in this notebook
                display.clear_output(wait=True)
                display.display(i)
            # Swap CURRENT and NEXT frames
            frame = next_frame
            # Swap CURRENT and NEXT infer requests
            curr_request, next_request = next_request, curr_request
    # ctrl-c
    except KeyboardInterrupt:
        print("Interrupted")
    # Any different error
    except RuntimeError as e:
        print(e)
    finally:
        if use_popup:
            cv2.destroyAllWindows()
        if player is not None:
            # stop capturing
            player.stop()
    return async_fps

Test performance in async mode¶

async_fps = async_api(source=video_path, flip=False, fps=30, use_popup=False, skip_first_frames=800)
print(f"average throughput in async mode: {async_fps:.2f} fps")

Source ended

average throughput in async mode: 74.61 fps

Compare the performance¶

width = 0.4
fontsize = 14

plt.rc('font', size=fontsize)
fig, ax = plt.subplots(1, 1, figsize=(10, 8))

rects1 = ax.bar([0], sync_fps, width, color='#557f2d')
rects2 = ax.bar([width], async_fps, width)
ax.set_ylabel("frames per second")
ax.set_xticks([0, width])
ax.set_xticklabels(["Sync mode", "Async mode"])
ax.set_xlabel("Higher is better")

fig.suptitle('Sync mode VS Async mode')
fig.tight_layout()

plt.show()

AsyncInferQueue¶

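You can build an asynchronous mode pipeline with the AsyncInferQueue wrapper class. This class automatically spawns a pool of InferRequest objects (also called "jobs") and provides synchronization mechanisms to control the flow of the pipeline. This makes it simpler to manage the infer request queue in asynchronous mode.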

Setting a callback¶

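When a callback is set, any job that finishes inference calls that Python function. The callback function must take two arguments. The first is the request that calls the callback, which exposes the InferRequest API. The second, called "user data", lets you pass runtime values to the callback.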
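The standard library can illustrate the job pool plus callback idea. `MiniInferQueue` below is a hypothetical toy, not OpenVINO's `AsyncInferQueue`. It keeps a fixed number of job slots, calls a user callback with each job's result and the user data passed to `start_async`, and provides `wait_all()`. One difference: the real class passes an `InferRequest` to the callback, not a plain result. Callbacks run on worker threads, so the sketch guards shared state with a lock.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

class MiniInferQueue:
    """Hypothetical toy job pool mimicking the set_callback/start_async/wait_all flow."""

    def __init__(self, work_fn, jobs=2):
        self._work_fn = work_fn
        self._pool = ThreadPoolExecutor(max_workers=jobs)
        self._slots = threading.Semaphore(jobs)  # at most `jobs` requests in flight
        self._pending = []
        self._callback = None

    def set_callback(self, callback):
        self._callback = callback

    def start_async(self, inputs, userdata=None):
        self._slots.acquire()  # block while every job is busy
        def job():
            try:
                result = self._work_fn(inputs)
                if self._callback is not None:
                    self._callback(result, userdata)
            finally:
                self._slots.release()  # free the slot for the next request
        self._pending.append(self._pool.submit(job))

    def wait_all(self):
        for future in self._pending:
            future.result()  # re-raises any exception from a job
        self._pending.clear()

collected = []
results_lock = threading.Lock()

def on_done(result, userdata):
    # Runs on a worker thread, so protect the shared list
    with results_lock:
        collected.append((userdata, result))

job_queue = MiniInferQueue(lambda x: x * x, jobs=2)
job_queue.set_callback(on_done)
for n in range(5):
    job_queue.start_async(n, userdata=f"frame-{n}")
job_queue.wait_all()
print(sorted(collected))
```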

def callback(infer_request, info) -> None:
    """
    Define the callback function for postprocessing

    :param: infer_request: the infer_request object
            info: a tuple that includes the original frame and the start time
    :returns:
            None
    """
    global frame_number
    global total_time
    global inferqueue_fps
    stop_time = time.time()
    frame, start_time = info
    total_time = stop_time - start_time
    frame_number = frame_number + 1
    inferqueue_fps = frame_number / total_time

    res = infer_request.get_output_tensor(0).data[0]
    frame = postprocess(res, frame, inferqueue_fps)
    # Encode numpy array to jpg
    _, encoded_img = cv2.imencode(".jpg", frame, params=[cv2.IMWRITE_JPEG_QUALITY, 90])
    # Create IPython image
    i = display.Image(data=encoded_img)

    # Display the image in this notebook
    display.clear_output(wait=True)
    display.display(i)

def inferqueue(source, flip, fps, skip_first_frames) -> None:
    """
    Define the main function for video processing with async infer queue

    :param: source: the video path or the ID of your webcam
    :returns:
            None
    """
    # Create infer requests queue
    infer_queue = ov.AsyncInferQueue(compiled_model, 2)
    infer_queue.set_callback(callback)
    player = None
    try:
        # Create a video player
        player = utils.VideoPlayer(source, flip=flip, fps=fps, skip_first_frames=skip_first_frames)
        # Start capturing
        start_time = time.time()
        player.start()
        while True:
            # Capture frame
            frame = player.next()
            if frame is None:
                print("Source ended")
                break
            resized_frame = preprocess(frame)
            # Start the inference request with async infer queue
            infer_queue.start_async({input_layer_ir.any_name: resized_frame}, (frame, start_time))
    except KeyboardInterrupt:
        print("Interrupted")
    # Any different error
    except RuntimeError as e:
        print(e)
    finally:
        infer_queue.wait_all()
        # the player may not exist if creating it failed
        if player is not None:
            player.stop()

Test the performance with AsyncInferQueue¶

frame_number = 0
total_time = 0
# initialize so the print below works even if no frame reaches the callback
inferqueue_fps = 0
inferqueue(source=video_path, flip=False, fps=30, skip_first_frames=800)
print(f"average throughput in async mode with async infer queue: {inferqueue_fps:.2f} fps")

average throughput in async mode with async infer queue: 113.01 fps
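The throughput figures from this run can be expressed as speedups over the synchronous baseline. The numbers are copied from the outputs above and will differ on other hardware.

```python
measured_fps = {
    "sync": 47.04,
    "async (2 infer requests)": 74.61,
    "AsyncInferQueue (2 jobs)": 113.01,
}

baseline = measured_fps["sync"]
# Speedup = throughput of a mode divided by the synchronous throughput
speedups = {mode: fps / baseline for mode, fps in measured_fps.items()}
for mode, speedup in speedups.items():
    print(f"{mode}: {speedup:.2f}x")
```

With these numbers, the two-request async loop reaches roughly 1.59x the synchronous throughput, and AsyncInferQueue reaches roughly 2.40x.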