Big Transfer Image Classification Model Quantization Pipeline with NNCF

This Jupyter notebook can be launched only after a local installation.

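This tutorial demonstrates how to quantize, with NNCF, a Big Transfer image classification model that is fine-tuned on a subset of the ImageNet dataset with 10 class labels (Imagenette). It uses the BiT-M-R50x1/1 model, which was trained on ImageNet-21k. Big Transfer is a recipe for pre-training image classification models on large supervised datasets and then efficiently fine-tuning them on a specific target task. The recipe achieves excellent performance on a wide variety of tasks, even when very few labeled examples from the target dataset are used. This tutorial uses the OpenVINO backend to perform model quantization with NNCF.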
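To make the idea of INT8 quantization concrete: post-training quantization replaces FP32 values with 8-bit integers plus a scale (and possibly a zero point) derived from the observed value range. The NumPy sketch below shows asymmetric (affine) per-tensor quantization and dequantization of a single array. It illustrates the arithmetic only; `quantize_affine` and `dequantize_affine` are hypothetical names, and NNCF's actual scheme (symmetric or asymmetric, per-tensor or per-channel scales, range estimation) is chosen by the library and may differ.

```python
import numpy as np


def quantize_affine(x: np.ndarray):
    """Hypothetical helper: asymmetric per-tensor quantization to uint8."""
    qmin, qmax = 0, 255
    # Extend the range to include 0.0 so that zero is exactly representable
    x_min = min(float(x.min()), 0.0)
    x_max = max(float(x.max()), 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any positive scale works
    zero_point = int(round(qmin - x_min / scale))
    q = np.clip(np.round(x / scale) + zero_point, qmin, qmax).astype(np.uint8)
    return q, scale, zero_point


def dequantize_affine(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Map the integers back to approximate float values."""
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.5, size=1000).astype(np.float32)

q, scale, zp = quantize_affine(weights)
restored = dequantize_affine(q, scale, zp)
max_err = float(np.abs(weights - restored).max())
print(f"scale={scale:.5f}, zero_point={zp}, max abs error={max_err:.5f}")
```

For values inside the calibrated range, the round-trip error stays within about half a quantization step (`scale / 2`), which is why the choice of range matters so much for accuracy.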


import platform

%pip install -q "tensorflow-macos>=2.5; sys_platform == 'darwin' and platform_machine == 'arm64' and python_version > '3.8'" # macOS M1 and M2
%pip install -q "tensorflow-macos>=2.5,<=2.12.0; sys_platform == 'darwin' and platform_machine == 'arm64' and python_version <= '3.8'" # macOS M1 and M2
%pip install -q "tensorflow>=2.5; sys_platform == 'darwin' and platform_machine != 'arm64' and python_version > '3.8'" # macOS x86
%pip install -q "tensorflow>=2.5,<=2.12.0; sys_platform == 'darwin' and platform_machine != 'arm64' and python_version <= '3.8'" # macOS x86
%pip install -q "tensorflow>=2.5; sys_platform != 'darwin' and python_version > '3.8'"
%pip install -q "tensorflow>=2.5,<=2.12.0; sys_platform != 'darwin' and python_version <= '3.8'"
%pip install -q "openvino>=2024.0.0" "nncf>=2.7.0" "tensorflow-hub>=0.15.0" tf_keras
%pip install -q "scikit-learn>=1.3.2"

if platform.system() != "Windows":
    %pip install -q "matplotlib>=3.4" "tensorflow_datasets>=4.9.0"
else:
    %pip install -q "matplotlib>=3.4,<3.7" "tensorflow_datasets>=4.9.0,<4.9.3"

Note: you may need to restart the kernel to use updated packages.


import os
import numpy as np
from pathlib import Path
from openvino.runtime import Core
import openvino as ov
import nncf
import logging
from nncf.common.logging.logger import set_log_level

set_log_level(logging.ERROR)

from sklearn.metrics import accuracy_score

# Set before importing TensorFlow: use the legacy Keras 2 API, reduce TF C++ logging,
# and cache TF Hub modules in a local directory
os.environ["TF_USE_LEGACY_KERAS"] = "1"
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"
os.environ["TFHUB_CACHE_DIR"] = str(Path("./tfhub_modules").resolve())

import tensorflow as tf
import tensorflow_datasets as tfds
import tensorflow_hub as hub

tfds.core.utils.gcs_utils._is_gcs_disabled = True
os.environ["NO_GCE_CHECK"] = "true"

INFO:nncf:NNCF initialized successfully.
Supported frameworks detected: torch, tensorflow, onnx, openvino

core = Core()

tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)

# Number of top-ranked predictions kept per image (top-1; the accuracy computation below expects 1)
MAX_PREDS = 1
TRAINING_BATCH_SIZE = 128
BATCH_SIZE = 1
IMG_SIZE = (256, 256)  # Default ImageNet image size
NUM_CLASSES = 10  # Imagenette dataset
FINE_TUNING_STEPS = 1  # Passed to model.fit() as the number of epochs
LR = 1e-5

MEAN_RGB = (0.485 * 255, 0.456 * 255, 0.406 * 255)  # From the ImageNet dataset
STDDEV_RGB = (0.229 * 255, 0.224 * 255, 0.225 * 255)  # From the ImageNet dataset

Dataset Preparation

datasets, datasets_info = tfds.load(
    "imagenette/160px",
    shuffle_files=True,
    as_supervised=True,
    with_info=True,
    read_config=tfds.ReadConfig(shuffle_seed=0),
)

train_ds, validation_ds = datasets["train"], datasets["validation"]

2024-07-12 23:29:23.376213: E tensorflow/compiler/xla/stream_executor/cuda/cuda_driver.cc:266] failed call to cuInit: CUDA_ERROR_COMPAT_NOT_SUPPORTED_ON_DEVICE: forward compatibility was attempted on non supported HW
2024-07-12 23:29:23.376437: E tensorflow/compiler/xla/stream_executor/cuda/cuda_diagnostics.cc:312] kernel version 470.182.3 does not match DSO version 470.223.2 -- cannot find working devices in this configuration

def preprocessing(image, label):
    # Resize, scale pixel values to [0, 1], and one-hot encode the label
    image = tf.image.resize(image, IMG_SIZE)
    image = tf.cast(image, tf.float32) / 255.0
    label = tf.one_hot(label, NUM_CLASSES)
    return image, label


train_dataset = (
    train_ds.map(preprocessing, num_parallel_calls=tf.data.experimental.AUTOTUNE)
    .batch(TRAINING_BATCH_SIZE)
    .prefetch(tf.data.experimental.AUTOTUNE)
)
validation_dataset = (
    validation_ds.map(preprocessing, num_parallel_calls=tf.data.experimental.AUTOTUNE)
    .batch(TRAINING_BATCH_SIZE)
    .prefetch(tf.data.experimental.AUTOTUNE)
)

# Class label dictionary mapping Imagenette sample names (synset IDs) to class names

lbl_dict = dict(
    n01440764="tench",
    n02102040="English springer",
    n02979186="cassette player",
    n03000684="chain saw",
    n03028079="church",
    n03394916="French horn",
    n03417042="garbage truck",
    n03425413="gas pump",
    n03445777="golf ball",
    n03888257="parachute",
)

# Imagenette sample names (synset IDs), indexed by class ID
class_idx_dict = [
    "n01440764",
    "n02102040",
    "n02979186",
    "n03000684",
    "n03028079",
    "n03394916",
    "n03417042",
    "n03425413",
    "n03445777",
    "n03888257",
]


def label_func(key):
    return lbl_dict[key]

Plotting data samples

import matplotlib.pyplot as plt

# Get the class labels (synset IDs) from the dataset info
class_labels = datasets_info.features["label"].names

# Display training examples with their labels
num_examples_to_display = 4
fig, axes = plt.subplots(nrows=1, ncols=num_examples_to_display, figsize=(10, 5))

for i, (image, label_index) in enumerate(train_ds.take(num_examples_to_display)):
    label_name = class_labels[label_index.numpy()]
    axes[i].imshow(image.numpy())
    axes[i].set_title(f"{label_func(label_name)}")
    axes[i].axis("off")

plt.tight_layout()
plt.show()

# Get the class labels (synset IDs) from the dataset info
class_labels = datasets_info.features["label"].names

# Display validation examples with their labels
num_examples_to_display = 4
fig, axes = plt.subplots(nrows=1, ncols=num_examples_to_display, figsize=(10, 5))

for i, (image, label_index) in enumerate(validation_ds.take(num_examples_to_display)):
    label_name = class_labels[label_index.numpy()]
    axes[i].imshow(image.numpy())
    axes[i].set_title(f"{label_func(label_name)}")
    axes[i].axis("off")

plt.tight_layout()
plt.show()
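Both plotting loops turn an integer label into a readable title in two steps: `class_labels` (read from `datasets_info`) maps the class index to an Imagenette synset ID, and `label_func` maps that synset ID to a class name through `lbl_dict`. A self-contained sketch of the same chain, assuming a hypothetical three-class excerpt (`example_class_labels`, `example_lbl_dict`) in place of the real dataset metadata, so that running it does not overwrite the notebook's variables:

```python
# Hypothetical excerpt: the notebook reads the full synset list from
# datasets_info.features["label"].names and defines all 10 names in lbl_dict.
example_class_labels = ["n01440764", "n02102040", "n02979186"]
example_lbl_dict = {
    "n01440764": "tench",
    "n02102040": "English springer",
    "n02979186": "cassette player",
}


def index_to_name(label_index: int) -> str:
    """Integer class index -> synset ID -> human-readable class name."""
    synset_id = example_class_labels[label_index]
    return example_lbl_dict[synset_id]


for idx in range(len(example_class_labels)):
    print(idx, example_class_labels[idx], index_to_name(idx))
```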

Model Fine-tuning

# Load the Big Transfer model
bit_model_url = "https://www.kaggle.com/models/google/bit/frameworks/TensorFlow2/variations/m-r50x1/versions/1"
bit_m = hub.KerasLayer(bit_model_url, trainable=True)

# Customize the model for the new task: add a softmax classification head with NUM_CLASSES outputs
model = tf.keras.Sequential([bit_m, tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")])

# Compile the model
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=LR),
    loss="categorical_crossentropy",
    metrics=["accuracy"],
)

# Fine-tune the model; take() counts batches here, and the training split has
# fewer than 3000 batches, so the whole split is used
model.fit(
    train_dataset.take(3000),
    epochs=FINE_TUNING_STEPS,
    validation_data=validation_dataset.take(1000),
)

model.save("./bit_tf_model/", save_format="tf")

101/101 [==============================] - 968s 9s/step - loss: 0.5046 - accuracy: 0.8758 - val_loss: 0.0804 - val_accuracy: 0.9660
WARNING:absl:Found untraced functions such as _update_step_xla while saving (showing 1 of 1).


These functions will not be directly callable after loading.
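The fine-tuning cell adds a `Dense(NUM_CLASSES, activation="softmax")` head on top of BiT and trains it with `categorical_crossentropy` on the one-hot labels produced by `preprocessing`. As a minimal NumPy sketch of what that loss computes for one batch (the labels and logits are made-up values, and the probability clipping only mimics the kind of guard Keras applies against `log(0)`):

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    # Subtract the row-wise max for numerical stability before exponentiating
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def categorical_crossentropy(one_hot: np.ndarray, probs: np.ndarray) -> float:
    # Batch mean of -sum_k y_k * log(p_k); clipping avoids log(0)
    eps = 1e-7
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(np.mean(-np.sum(one_hot * np.log(probs), axis=-1)))


num_classes = 10
labels = np.array([3, 7])               # hypothetical integer labels
one_hot = np.eye(num_classes)[labels]   # same encoding as tf.one_hot
logits = np.zeros((2, num_classes))
logits[0, 3] = 2.0                      # confident and correct
logits[1, 0] = 2.0                      # confident but wrong

probs = softmax(logits)
loss = categorical_crossentropy(one_hot, probs)
print(f"loss = {loss:.4f}")
```

The wrong-but-confident second sample dominates the loss, which is what pushes the new head toward the correct class during fine-tuning.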

Perform model optimization (IR) step

ir_path = Path("./bit_ov_model/bit_m_r50x1_1.xml")

if not ir_path.exists():
    print("Initiating model optimization..!!!")
    ov_model = ov.convert_model("./bit_tf_model")
    ov.save_model(ov_model, ir_path)
else:
    print(f"IR model {ir_path} already exists.")

Initiating model optimization..!!!
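`ov.save_model` writes an OpenVINO IR as two files with the same stem: an `.xml` file describing the topology and a `.bin` file holding the weights. The cell above only checks for the `.xml` file, so a directory whose `.bin` file is missing would still be treated as already converted. A small sketch of a stricter check, where `ir_exists` is a hypothetical helper rather than part of the OpenVINO API:

```python
import tempfile
from pathlib import Path


def ir_exists(xml_path) -> bool:
    """Hypothetical helper: True only if both parts of an OpenVINO IR are present."""
    xml_path = Path(xml_path)
    return xml_path.is_file() and xml_path.with_suffix(".bin").is_file()


# Quick demonstration in a throwaway directory (placeholder files, not a real IR)
with tempfile.TemporaryDirectory() as tmp_dir:
    xml_file = Path(tmp_dir) / "model.xml"
    xml_file.write_text("<net/>")
    only_xml = ir_exists(xml_file)
    xml_file.with_suffix(".bin").write_bytes(b"")
    both_files = ir_exists(xml_file)

print(only_xml, both_files)
```

In the notebook, `if not ir_exists(ir_path):` could stand in for `if not ir_path.exists():`.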

Compute accuracy of the TF model

tf_model = tf.keras.models.load_model("./bit_tf_model/")

tf_predictions = []
gt_label = []

# Recover the integer ground-truth class from each one-hot label
for _, label in validation_dataset:
    for cls_label in label:
        l_list = cls_label.numpy().tolist()
        gt_label.append(l_list.index(1))

for img_batch, label_batch in validation_dataset:
    tf_result_batch = tf_model.predict(img_batch, verbose=0)
    for i in range(len(img_batch)):
        tf_result = tf_result_batch[i]
        tf_result = tf.reshape(tf_result, [-1])
        # Indices of the MAX_PREDS highest scores, best first
        top_label_idx = np.argsort(tf_result)[-MAX_PREDS:][::-1]
        tf_predictions.append(top_label_idx)

# Convert the lists to NumPy arrays for accuracy calculation
tf_predictions = np.array(tf_predictions)
gt_label = np.array(gt_label)

tf_acc_score = accuracy_score(gt_label, tf_predictions)

Compute accuracy of the OpenVINO model

Select the device for inference:

import ipywidgets as widgets

core = ov.Core()
device = widgets.Dropdown(
    options=core.available_devices + ["AUTO"],
    value="AUTO",
    description="Device:",
    disabled=False,
)

device

Dropdown(description='Device:', index=1, options=('CPU', 'AUTO'), value='AUTO')

ov_fp32_model = core.read_model("./bit_ov_model/bit_m_r50x1_1.xml")
ov_fp32_model.reshape([1, IMG_SIZE[0], IMG_SIZE[1], 3])

# Compile for the device selected above (for example CPU, GPU or AUTO)
compiled_model = ov.compile_model(ov_fp32_model, device.value)
output = compiled_model.outputs[0]

ov_predictions = []
for img_batch, _ in validation_dataset:
    for image in img_batch:
        # The model was reshaped to a static batch size of 1
        image = tf.expand_dims(image, axis=0)
        pred = compiled_model(image)[output]
        ov_result = tf.reshape(pred, [-1])
        top_label_idx = np.argsort(ov_result)[-MAX_PREDS:][::-1]
        ov_predictions.append(top_label_idx)

fp32_acc_score = accuracy_score(gt_label, ov_predictions)

Quantize the OpenVINO model using NNCF

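Model quantization with NNCF consists of three steps:

1. Preprocess and prepare validation samples for NNCF calibration.
2. Run NNCF quantization on the OpenVINO FP32 model.
3. Serialize the quantized OpenVINO INT8 model.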
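Conceptually, calibration runs the FP32 model on the calibration samples and records statistics of the values flowing through each tensor that will be quantized; the quantization ranges are then derived from those statistics. The NumPy sketch below shows the simplest form of this idea: a running min/max observer over a stream of batch-size-1 inputs that yields a symmetric INT8 scale. `MinMaxObserver` is a hypothetical class, the random activations stand in for real model outputs, and NNCF's own statistics collection and range estimators are more elaborate than this.

```python
import numpy as np


class MinMaxObserver:
    """Hypothetical running min/max statistics collector for one tensor."""

    def __init__(self) -> None:
        self.min_val = np.inf
        self.max_val = -np.inf

    def update(self, batch: np.ndarray) -> None:
        self.min_val = min(self.min_val, float(batch.min()))
        self.max_val = max(self.max_val, float(batch.max()))

    def symmetric_int8_scale(self) -> float:
        # Symmetric signed INT8: map [-max|x|, max|x|] onto [-127, 127]
        abs_max = max(abs(self.min_val), abs(self.max_val))
        return abs_max / 127.0 if abs_max > 0 else 1.0


rng = np.random.default_rng(42)
observer = MinMaxObserver()
# Stand-in for activations produced by calibration batches of size 1
for _ in range(100):
    activations = rng.normal(0.0, 1.0, size=(1, 64)).astype(np.float32)
    observer.update(activations)

scale = observer.symmetric_int8_scale()
print(f"observed range: [{observer.min_val:.3f}, {observer.max_val:.3f}], scale: {scale:.5f}")
```

Because a plain min/max is sensitive to outliers, the calibration samples should be preprocessed exactly like the data the quantized model will see at inference time.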

def nncf_preprocessing(image, label):
    # Note: calibration inputs are normalized with the ImageNet mean/std, whereas
    # fine-tuning and evaluation use plain [0, 1] scaling (see preprocessing())
    image = tf.image.resize(image, IMG_SIZE)
    image = image - MEAN_RGB
    image = image / STDDEV_RGB
    return image


val_ds = (
    validation_ds.map(nncf_preprocessing, num_parallel_calls=tf.data.experimental.AUTOTUNE)
    .batch(1)
    .prefetch(tf.data.experimental.AUTOTUNE)
)

# val_ds already yields model inputs, so no transform function is passed
calibration_dataset = nncf.Dataset(val_ds)

ov_fp32_model = core.read_model("./bit_ov_model/bit_m_r50x1_1.xml")

ov_int8_model = nncf.quantize(ov_fp32_model, calibration_dataset, fast_bias_correction=False)

ov.save_model(ov_int8_model, "./bit_ov_int8_model/bit_m_r50x1_1_ov_int8.xml")

Compute accuracy of the quantized model

nncf_quantized_model = core.read_model("./bit_ov_int8_model/bit_m_r50x1_1_ov_int8.xml")
nncf_quantized_model.reshape([1, IMG_SIZE[0], IMG_SIZE[1], 3])

# Compile for the device selected in the dropdown above (AUTO by default)
compiled_model = ov.compile_model(nncf_quantized_model, device.value)
output = compiled_model.outputs[0]

ov_predictions = []
for img_batch, _ in validation_dataset:
    for image in img_batch:
        image = tf.expand_dims(image, axis=0)
        pred = compiled_model(image)[output]
        ov_result = tf.reshape(pred, [-1])
        top_label_idx = np.argsort(ov_result)[-MAX_PREDS:][::-1]
        ov_predictions.append(top_label_idx)

int8_acc_score = accuracy_score(gt_label, ov_predictions)

Compare FP32 and INT8 accuracy

print(f"Accuracy of the tensorflow model (fp32): {tf_acc_score * 100:.2f}%")
print(f"Accuracy of the OpenVINO optimized model (fp32): {fp32_acc_score * 100:.2f}%")
print(f"Accuracy of the OpenVINO quantized model (int8): {int8_acc_score * 100:.2f}%")

accuracy_drop = fp32_acc_score - int8_acc_score
print(f"Accuracy drop between OV FP32 and INT8 model: {accuracy_drop * 100:.1f}%")

Accuracy of the tensorflow model (fp32): 96.60%
Accuracy of the OpenVINO optimized model (fp32): 96.60%
Accuracy of the OpenVINO quantized model (int8): 96.20%
Accuracy drop between OV FP32 and INT8 model: 0.4%

Compare inference results on one picture

# Access a validation sample
sample_idx = 50
vds = datasets["validation"]

if len(vds) > sample_idx:
    sample = next(vds.take(sample_idx + 1).skip(sample_idx).as_numpy_iterator())
else:
    print("Dataset does not have enough samples...!!!")

# Image data

sample_data = sample[0]

# ラベル情報

sample_label = sample[1]

# 画像データの前処理

image = tf.image.resize(sample_data, IMG_SIZE)

image = tf.expand_dims(image, axis=0)

image = tf.cast(image, tf.float32) / 255.0

# OpenVINO 推論

def ov_inference(model: ov.Model, image) -> str:

compiled_model = ov.compile_model(model, device.value)

output = compiled_model.outputs[0]

pred = compiled_model(image)[output]

ov_result = tf.reshape(pred, [-1])

pred_label = np.argsort(ov_result)[-MAX_PREDS::][::-1]

return pred_label

# OpenVINO FP32 モデル

ov_fp32_model = core.read_model("./bit_ov_model/bit_m_r50x1_1.xml")

ov_fp32_model.reshape([1, IMG_SIZE[0], IMG_SIZE[1], 3])

# OpenVINO INT8 モデル

ov_int8_model = core.read_model("./bit_ov_int8_model/bit_m_r50x1_1_ov_int8.xml")

ov_int8_model.reshape([1, IMG_SIZE[0], IMG_SIZE[1], 3])

# OpenVINO FP32 モデル推論

ov_fp32_pred_label = ov_inference(ov_fp32_model, image)

print(f"Predicted label for the sample picture by float (fp32) model: {label_func(class_idx_dict[int(ov_fp32_pred_label)])}\n")

# OpenVINO int8 モデル推論

ov_int8_pred_label = ov_inference(ov_int8_model, image)

print(f"Predicted label for the sample picture by qunatized (int8) model: {label_func(class_idx_dict[int(ov_int8_pred_label)])}\n")

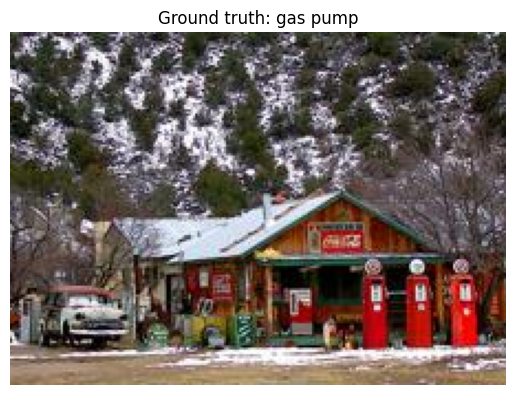

# 画像サンプルをグラウンドトゥルースでプロット

plt.figure()

plt.imshow(sample_data)

plt.title(f"Ground truth: {label_func(class_idx_dict[sample_label])}")

plt.axis("off")

plt.show()Predicted label for the sample picture by float (fp32) model: gas pump

(float (fp32) モデルによるサンプル画像の予測ラベル: ガスポンプ)

Predicted label for the sample picture by qunatized (int8) model: gas pump

(量子化 (int8) モデルによるサンプル画像の予測ラベル: ガソリンスタンド)
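Every prediction step in this notebook selects classes with `np.argsort(scores)[-MAX_PREDS:][::-1]`: `argsort` orders indices from lowest to highest score, the slice keeps the last `MAX_PREDS` of them, and `[::-1]` puts the best class first. A standalone sketch of that idiom, plus a check of how often two models' top-1 predictions agree, using made-up score arrays in place of real FP32 and INT8 outputs. `top_k_indices` and `top1_agreement` are hypothetical helpers; the `k <= 0` guard is an addition, because with `k = 0` the slice `[-0:]` would return every index.

```python
import numpy as np


def top_k_indices(scores: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k highest scores, best first (same idiom as the notebook)."""
    if k <= 0:
        raise ValueError("k must be positive")
    return np.argsort(scores)[-k:][::-1]


def top1_agreement(scores_a: np.ndarray, scores_b: np.ndarray) -> float:
    """Fraction of rows where two models pick the same top-1 class."""
    return float(np.mean(scores_a.argmax(axis=1) == scores_b.argmax(axis=1)))


# Made-up class scores for one image (10 classes)
scores = np.array([0.01, 0.02, 0.05, 0.03, 0.04, 0.10, 0.60, 0.05, 0.08, 0.02])
print(top_k_indices(scores, 1))
print(top_k_indices(scores, 3))

# Made-up scores for 4 images from two models
fp32_scores = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]])
int8_scores = np.array([[0.8, 0.2], [0.3, 0.7], [0.4, 0.6], [0.2, 0.8]])
print(top1_agreement(fp32_scores, int8_scores))
```

Measuring agreement over the whole validation set, rather than on a single picture, shows how often quantization actually changes a decision, which complements the accuracy drop reported above.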